Welcome to Trial Submission Studio

Transform clinical trial data into FDA-compliant CDISC formats with confidence.

Trial Submission Studio is a free, open-source desktop application for transforming clinical trial source data (CSV) into CDISC-compliant submission formats.

Caution

ALPHA SOFTWARE - ACTIVE DEVELOPMENT

Trial Submission Studio is currently in early development. Features are incomplete, APIs may change, and bugs are expected. Do not use for production regulatory submissions.

Always validate all outputs with qualified regulatory professionals before submission to regulatory authorities.

See It in Action

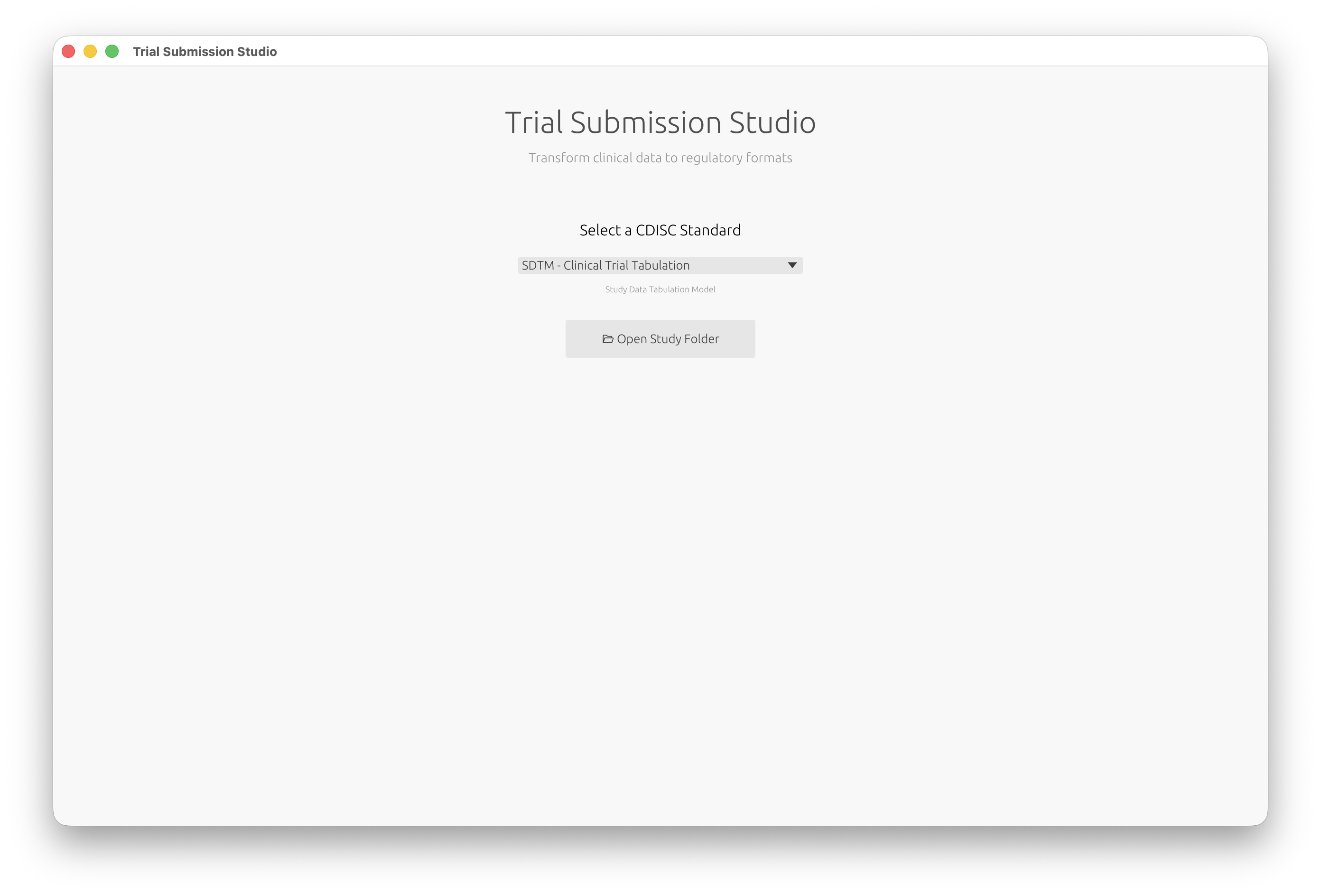

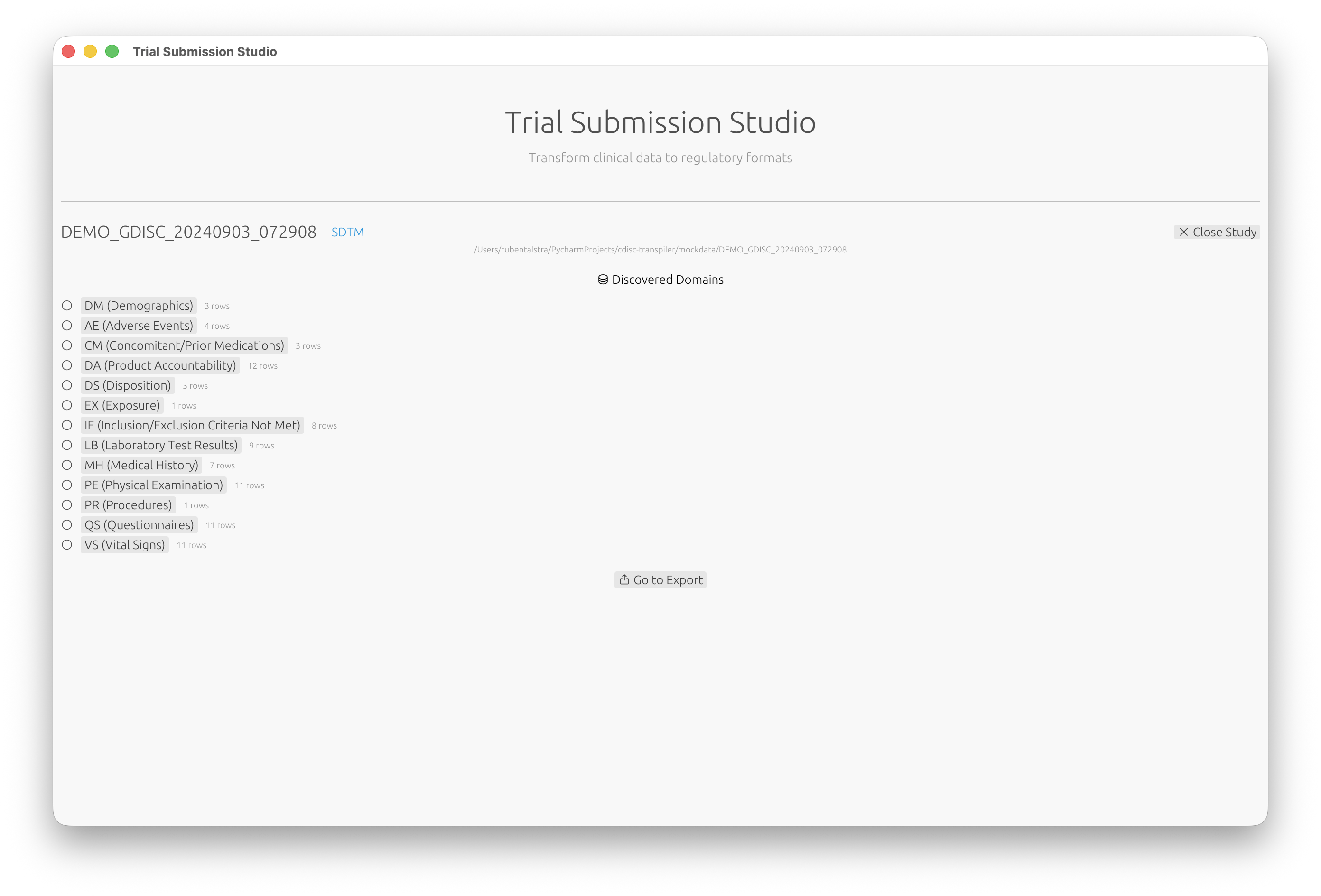

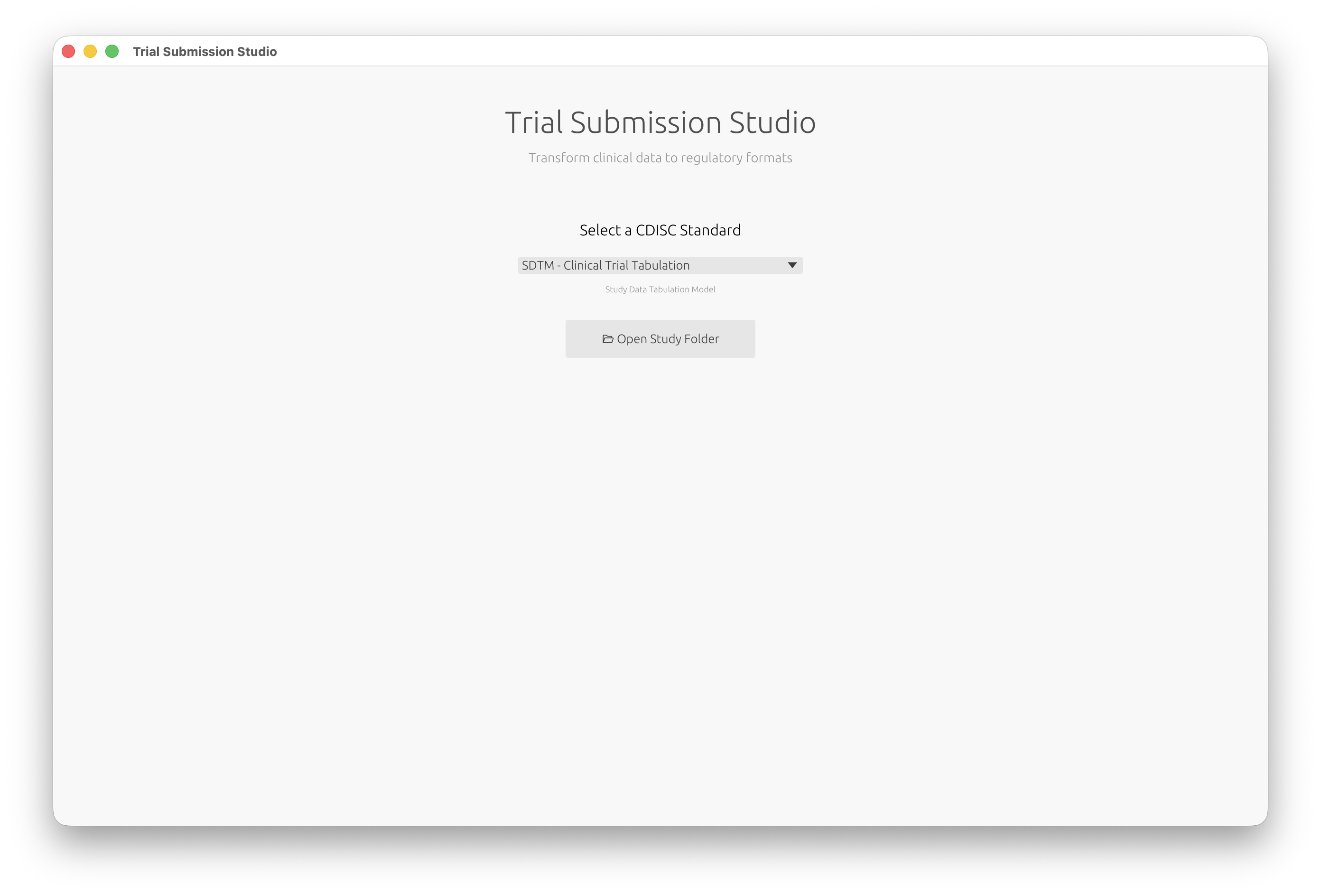

Select your CDISC standard and open your study data:

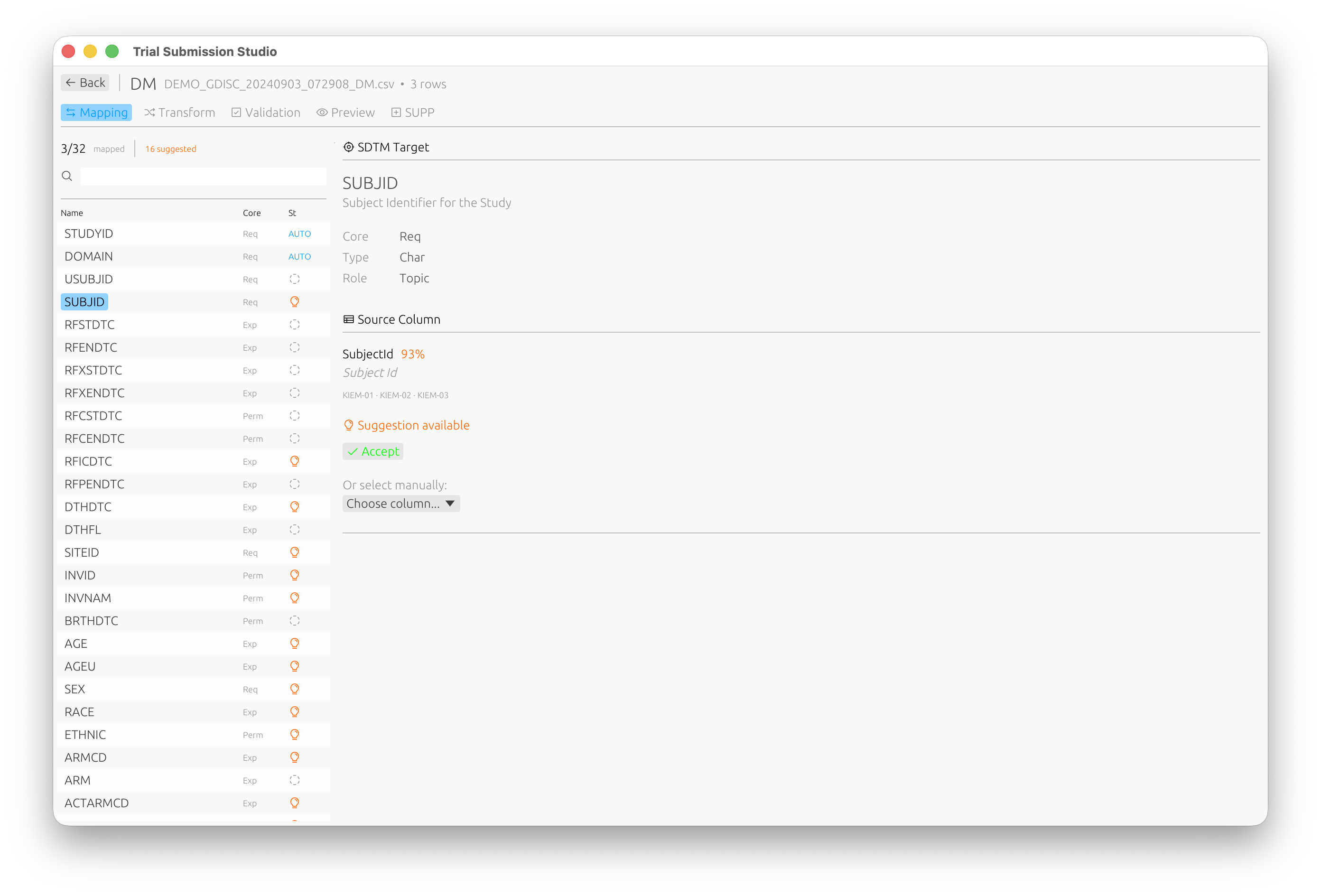

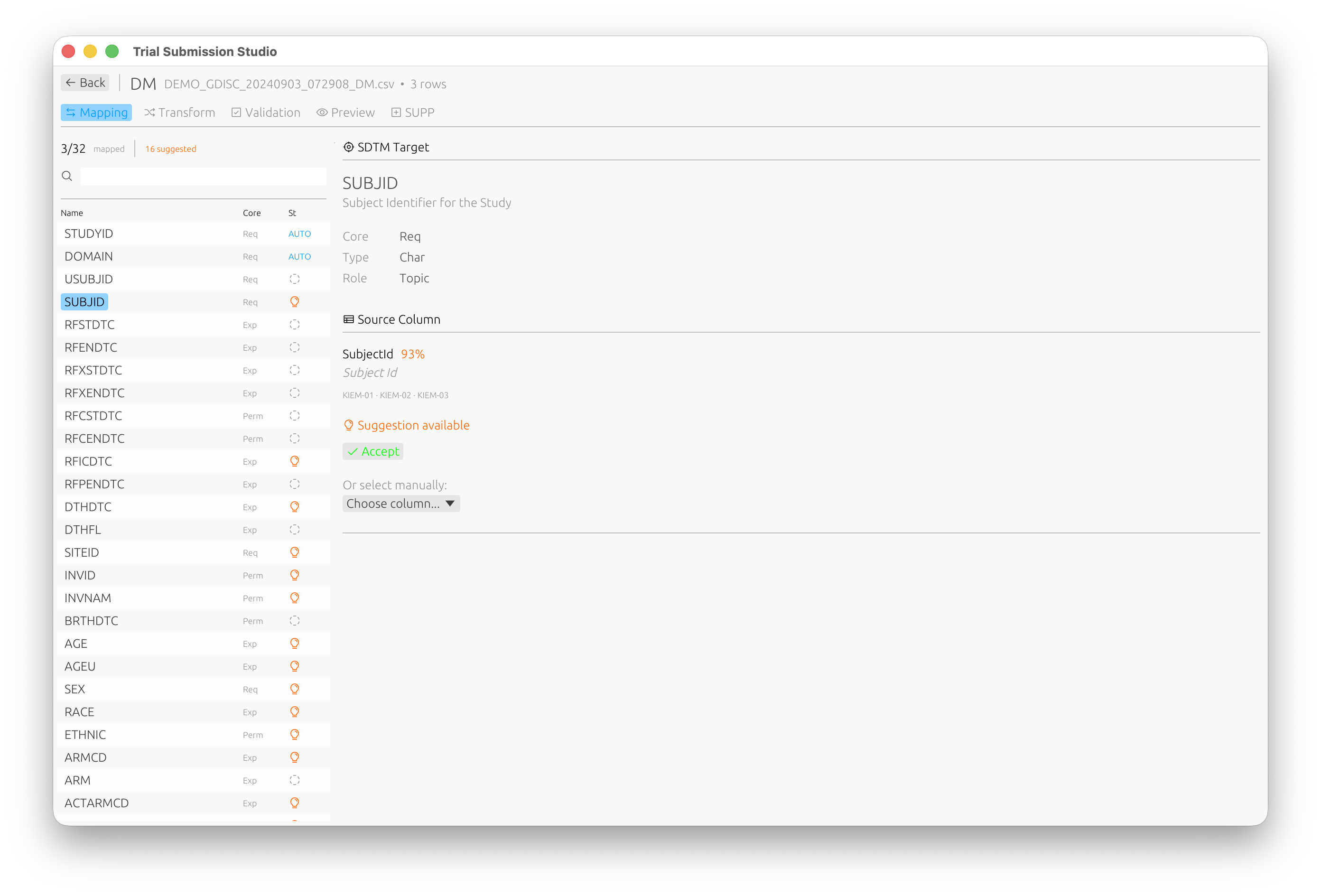

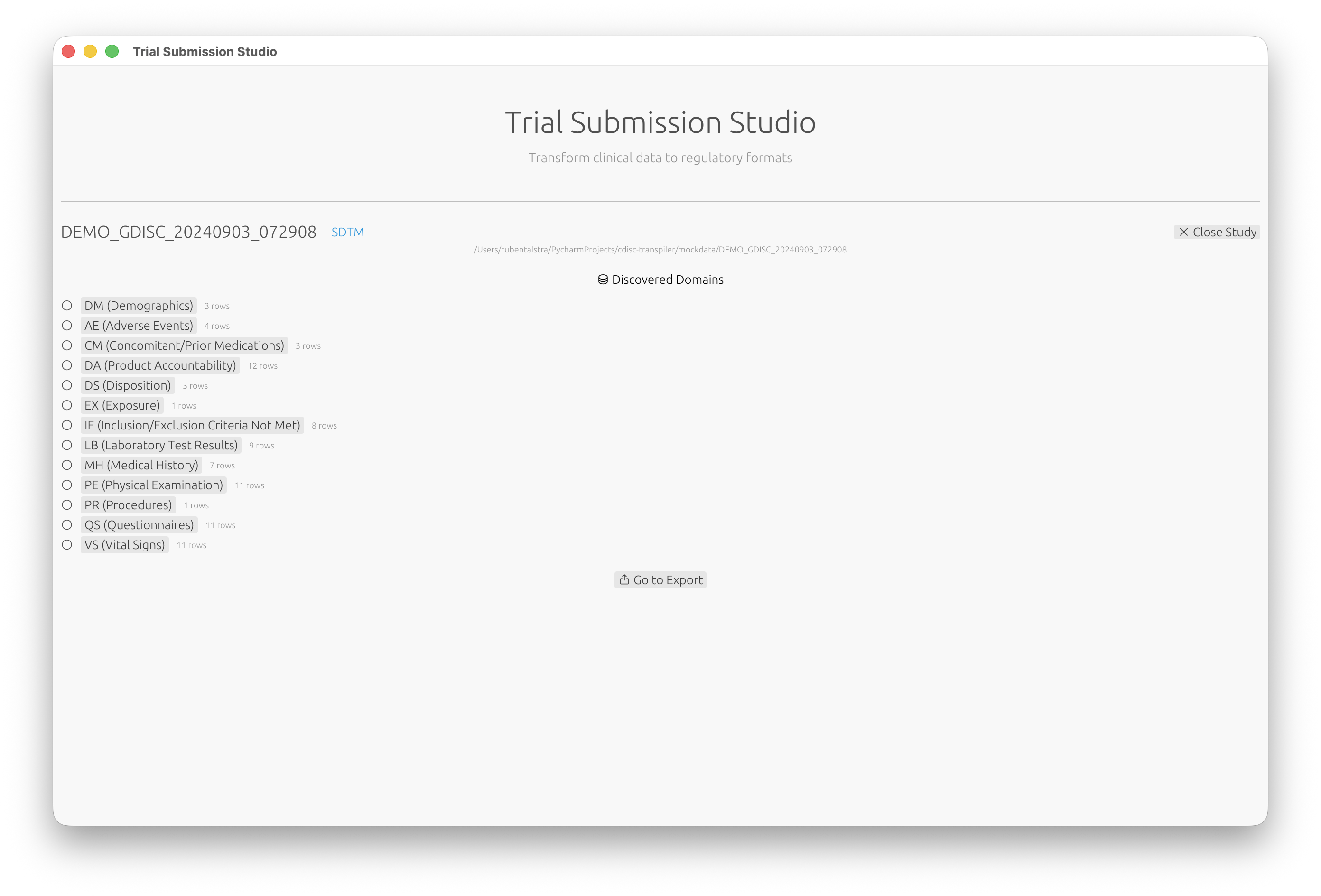

Automatic domain discovery with intelligent column mapping:

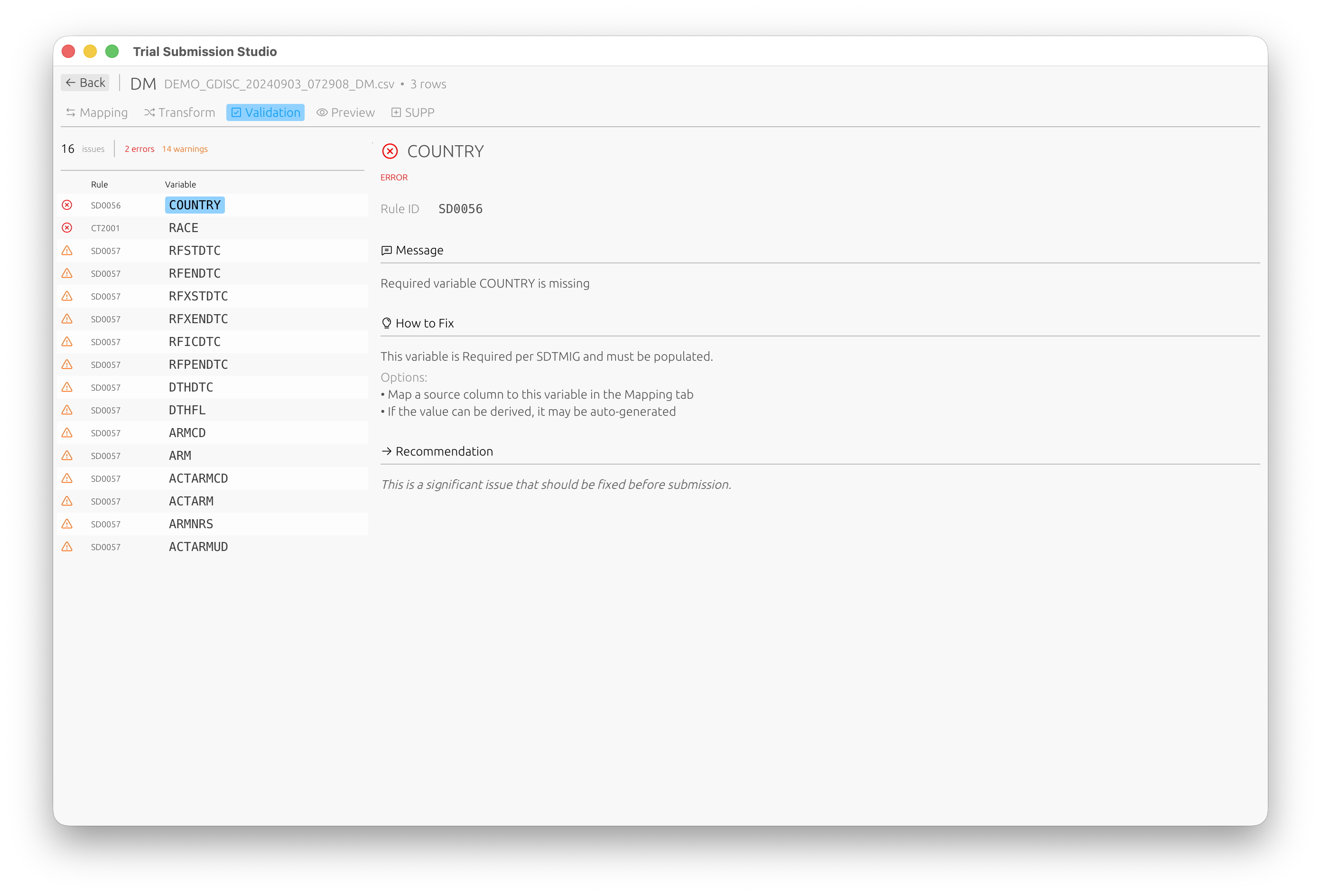

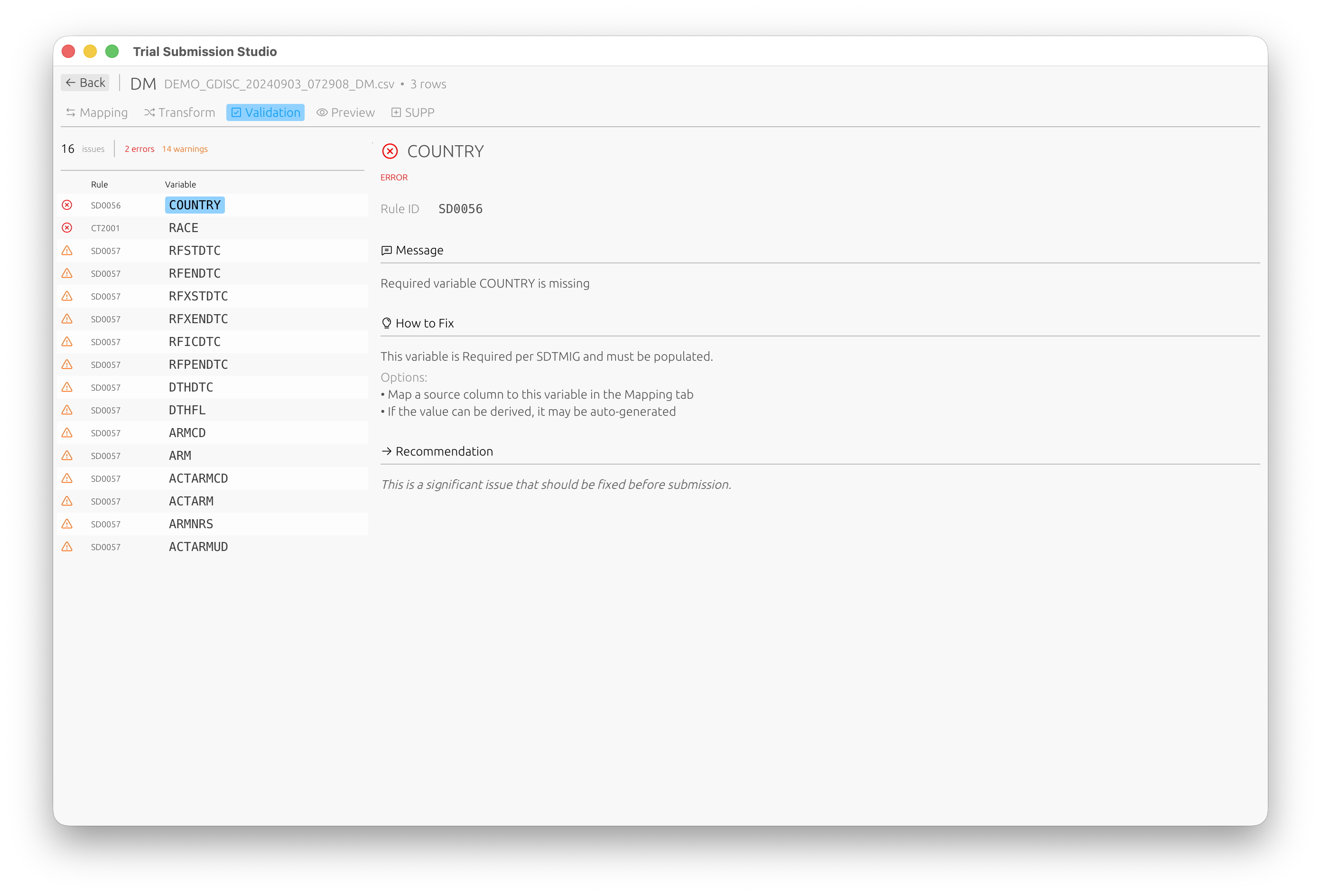

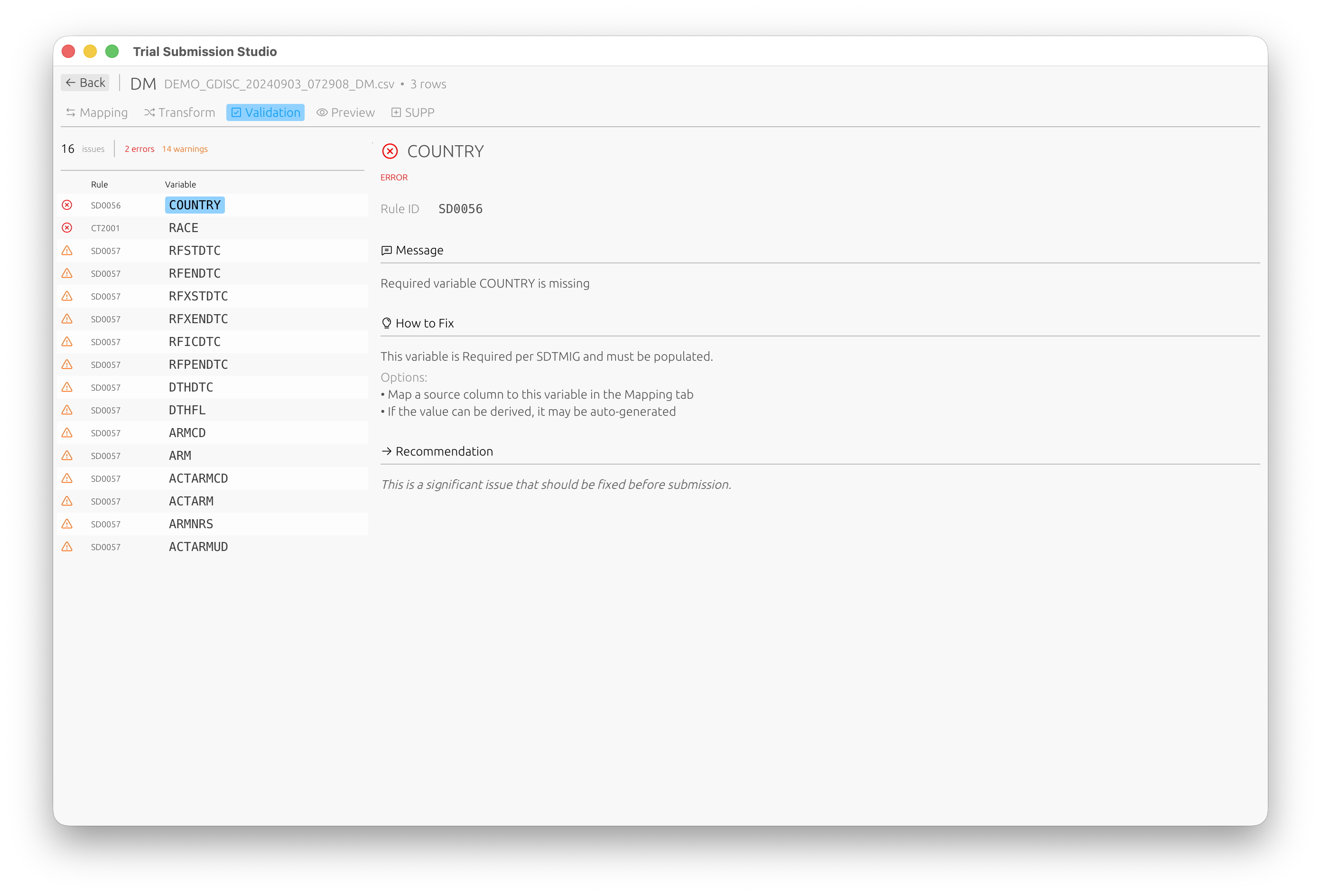

Built-in validation against CDISC standards:

Quick Links

| New Users | Clinical Programmers | Developers |

|---|---|---|

| Installation | User Guide | Architecture |

| Quick Start | CDISC Standards | Contributing |

| System Requirements | Output Formats | Development Setup |

Key Features

| Feature | Description |

|---|---|

| Multi-format Output | XPT V5/V8, Dataset-XML, Define-XML 2.1 |

| Intelligent Mapping | Fuzzy matching for automatic column-to-variable mapping |

| CDISC Validation | Built-in controlled terminology validation |

| Cross-platform | Native GUI for macOS, Windows, and Linux |

| Offline Operation | All CDISC standards embedded locally |

Supported Standards

Currently Supported:

- SDTM-IG v3.4

- Controlled Terminology (2024-2025 versions)

Planned:

- ADaM-IG v1.3

- SEND-IG v3.1.1

Getting Help

- Documentation: You’re reading it! Use the sidebar to navigate.

- Issues: Report bugs on GitHub

- Discussions: Ask questions on GitHub Discussions

License

Trial Submission Studio is open source software licensed under the MIT License.

Installation

Download the latest release for your platform from our GitHub Releases page.

Download Options

| Platform | Architecture | Format | Download |

|---|---|---|---|

| macOS | Apple Silicon (M1/M2/M3+) | .dmg or .zip | Download |

| macOS | Intel (x86_64) | .dmg or .zip | Download |

| Windows | x86_64 (64-bit) | .zip | Download |

| Windows | ARM64 | .zip | Download |

| Linux | x86_64 (64-bit) | .tar.gz | Download |

Verifying Your Download

Each release includes SHA256 checksum files (.sha256) for security

verification.

macOS/Linux

# Download the checksum file and binary, then verify

shasum -a 256 -c trial-submission-studio-*.sha256

Windows (PowerShell)

# Compare the checksum

Get-FileHash trial-submission-studio-*.zip -Algorithm SHA256

Platform-Specific Instructions

macOS

- Download the

.dmgfile for your architecture - Open the

.dmgfile - Drag Trial Submission Studio to your Applications folder

- On first launch, you may need to right-click and select “Open” to bypass Gatekeeper

[!TIP] Which version do I need?

Click the Apple menu () > About This Mac:

- Chip: Apple M1/M2/M3 → Download the Apple Silicon version

- Processor: Intel → Download the Intel version

Windows

- Download the

.zipfile for your architecture - Extract the archive to your preferred location

- Run

trial-submission-studio.exe

Linux

- Download the

.tar.gzfile - Extract:

tar -xzf trial-submission-studio-*.tar.gz - Run:

./trial-submission-studio

Uninstalling

Trial Submission Studio is a portable application that does not modify system settings or registry entries.

Windows

- Delete the extracted folder containing

trial-submission-studio.exe - Optionally delete settings from

%APPDATA%\trial-submission-studio\

macOS

- Drag Trial Submission Studio from Applications to Trash

- Optionally delete settings from

~/Library/Application Support/trial-submission-studio/

Linux

- Delete the AppImage file or extracted folder

- Optionally delete settings from

~/.config/trial-submission-studio/

Next Steps

- Quick Start Guide - Get up and running in 5 minutes

- System Requirements - Verify your system meets the requirements

- Building from Source - For developers who want to compile from source

Quick Start Guide

Get up and running with Trial Submission Studio in 5 minutes.

Overview

This guide walks you through the basic workflow:

flowchart LR

A["Import CSV"] --> B["Map Columns"]

B --> C["Validate"]

C --> D["Export"]

style A fill:#4a90d9,color:#fff

style D fill:#50c878,color:#fff

- Import your source CSV data

- Map columns to SDTM variables

- Validate against CDISC standards

- Export to XPT format

Step 1: Launch the Application

After installing Trial Submission Studio, launch the application:

- macOS: Open from Applications folder

- Windows: Run

trial-submission-studio.exe - Linux: Run

./trial-submission-studio

You’ll see the welcome screen where you can select your CDISC standard:

Step 2: Import Your Data

- Click Open Study Folder and select your data folder

- Trial Submission Studio will automatically:

- Detect column types

- Identify potential SDTM domains

- Parse date formats

Tip

Your data should have column headers in the first row.

Step 3: Review Discovered Domains

Trial Submission Studio automatically discovers domains from your source data:

- Review the list of discovered domains (DM, AE, VS, etc.)

- Click on a domain to configure its mappings

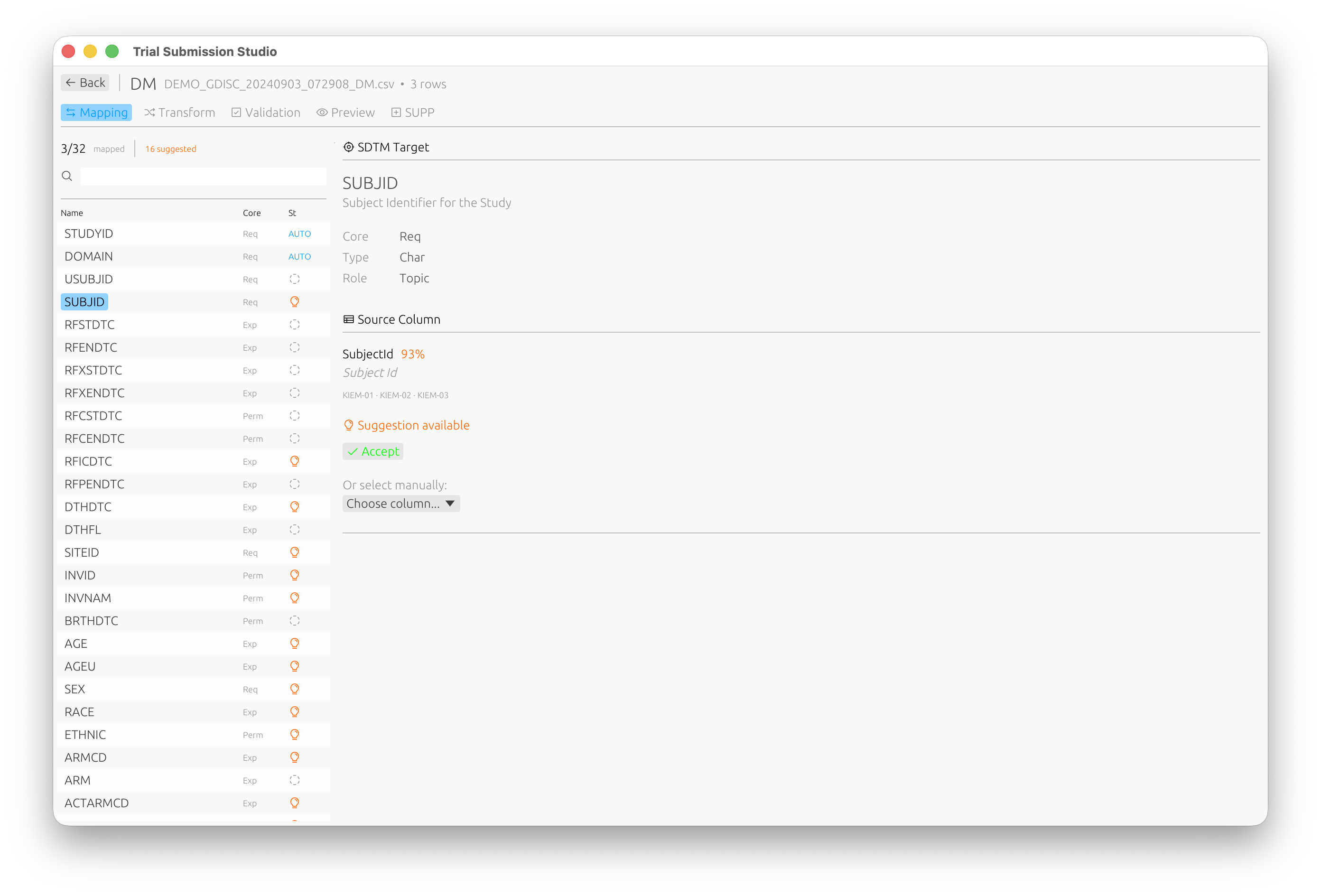

Step 4: Map Columns

- Review the suggested column mappings

- For each source column, select the corresponding SDTM variable

- Use the fuzzy matching suggestions to speed up mapping

The mapping interface shows:

- Source Column: Your CSV column name

- Target Variable: The SDTM variable

- Match Score: Confidence of the suggested mapping (e.g., 93% match)

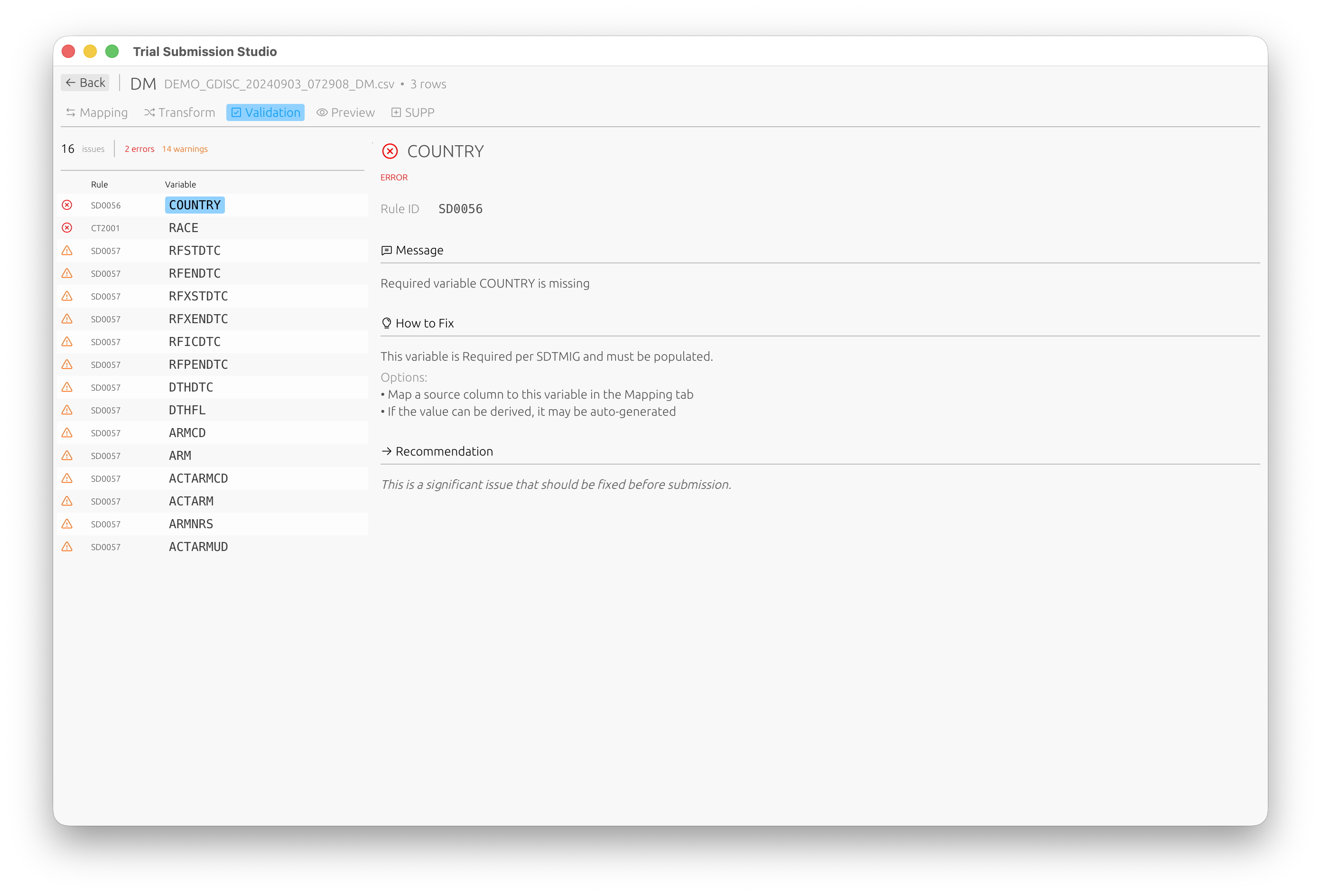

Step 5: Validate

- Switch to the Validation tab to check your data against CDISC rules

- Review any validation messages:

- Errors: Must be fixed before export

- Warnings: Should be reviewed

- Info: Informational messages

Each validation issue includes the rule ID, a description, and suggestions on how to fix it.

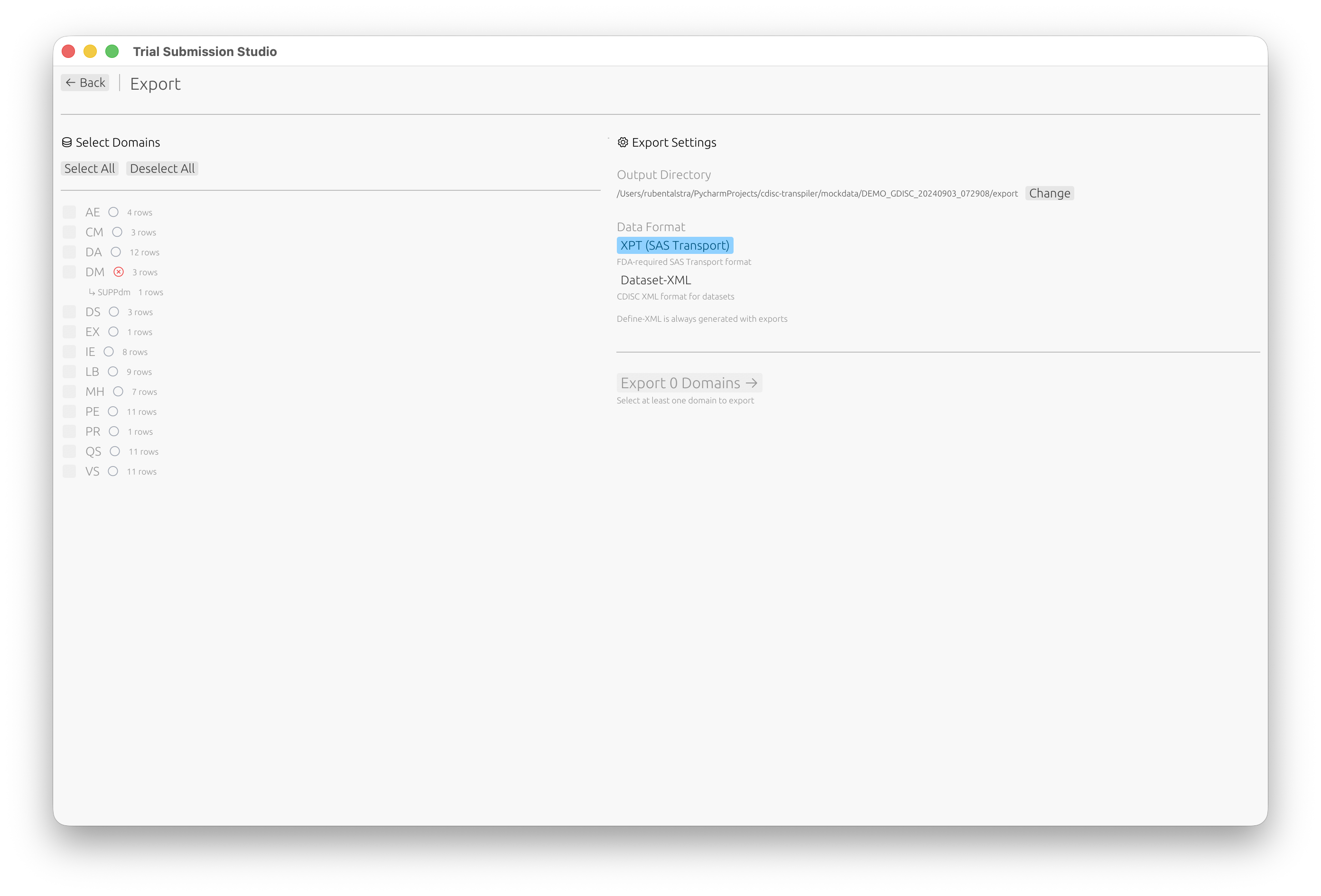

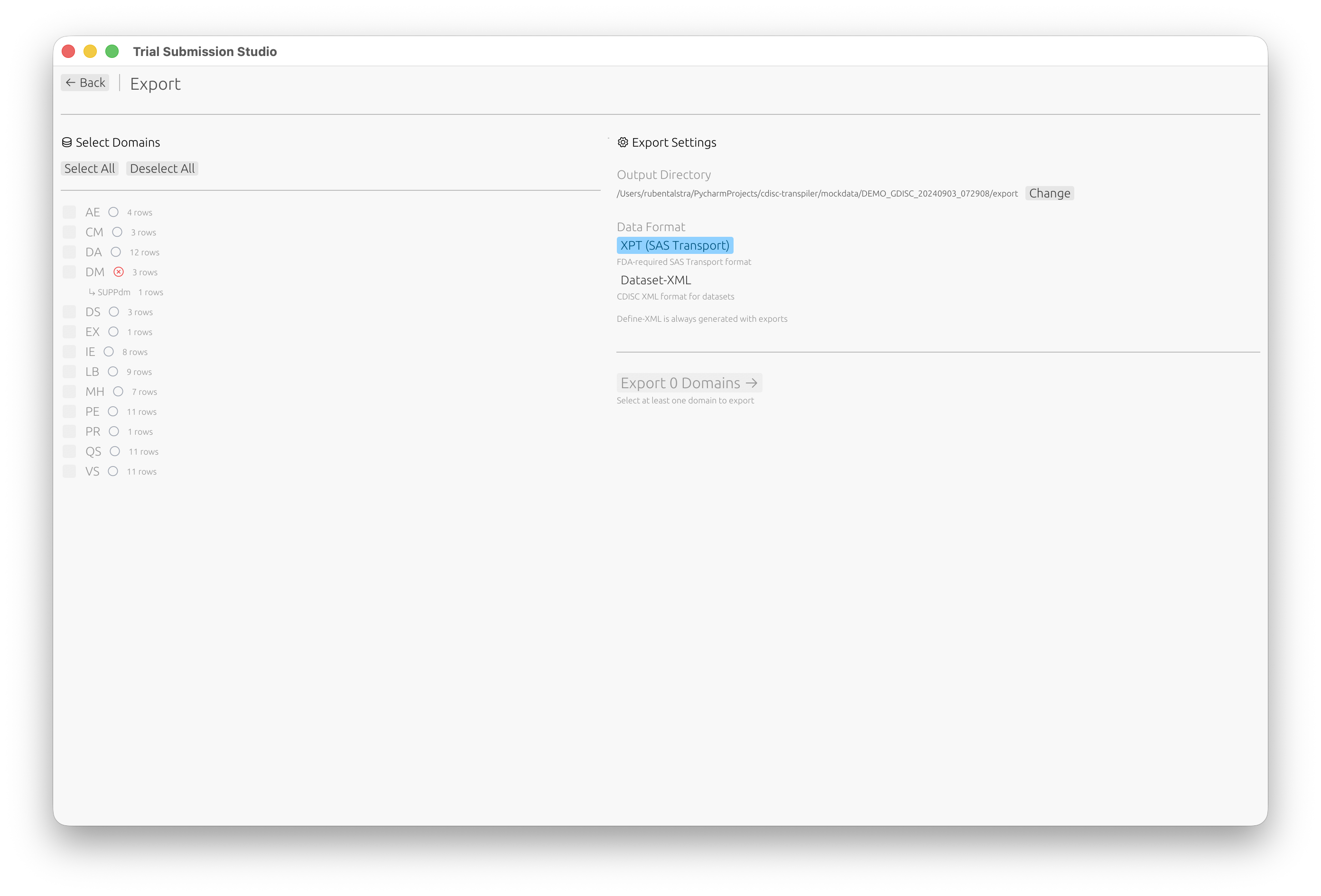

Step 6: Export

- Click Go to Export or navigate to the Export screen

- Select which domains to export

- Choose your output format:

- XPT (SAS Transport) (FDA standard)

- Dataset-XML (CDISC data exchange)

- Click Export

Next Steps

Now that you’ve completed the basic workflow:

- Interface Overview - Learn about all features

- Column Mapping - Advanced mapping techniques

- Validation - Understanding validation rules

- SDTM Standards - SDTM reference guide

System Requirements

Trial Submission Studio is designed to run on modern desktop systems with minimal resource requirements.

Supported Platforms

| Platform | Architecture | Minimum Version | Status |

|---|---|---|---|

| macOS | Apple Silicon (M1/M2/M3+) | macOS 11.0 (Big Sur) | Supported |

| macOS | Intel (x86_64) | macOS 10.15 (Catalina) | Supported |

| Windows | x86_64 (64-bit) | Windows 10 | Supported |

| Windows | ARM64 | Windows 11 | Supported |

| Linux | x86_64 (64-bit) | Ubuntu 20.04 or equivalent | Supported |

Hardware Requirements

| Component | Minimum | Recommended |

|---|---|---|

| RAM | 4 GB | 8 GB+ |

| Disk Space | 200 MB | 500 MB |

| Display | 1280x720 | 1920x1080+ |

Software Dependencies

Trial Submission Studio is a standalone application with no external dependencies:

- No SAS installation required

- No Java runtime required

- No internet connection required (works fully offline)

- All CDISC standards are embedded in the application

Performance Considerations

Large Datasets

Trial Submission Studio can handle datasets with:

- Hundreds of thousands of rows

- Hundreds of columns

For very large datasets (1M+ rows), consider:

- Ensuring adequate RAM (8GB+)

- Using SSD storage for faster I/O

- Processing data in batches if needed

Memory Usage

Memory usage scales with dataset size. Approximate guidelines:

- Small datasets (<10,000 rows): ~100 MB RAM

- Medium datasets (10,000-100,000 rows): ~500 MB RAM

- Large datasets (100,000+ rows): 1+ GB RAM

Troubleshooting

macOS Gatekeeper

On first launch, macOS may block the application. To resolve:

- Right-click the application

- Select “Open”

- Click “Open” in the dialog

Linux Permissions

Ensure the executable has run permissions:

chmod +x trial-submission-studio

Windows SmartScreen

If Windows SmartScreen blocks the application:

- Click “More info”

- Click “Run anyway”

Next Steps

- Installation - Download and install the application

- Quick Start - Get started in 5 minutes

Building from Source

For developers who want to compile Trial Submission Studio from source code.

Prerequisites

Required

- Rust 1.92+ - Install via rustup

- Git - For cloning the repository

Platform-Specific Dependencies

macOS

No additional dependencies required.

Linux (Ubuntu/Debian)

sudo apt-get install libgtk-3-dev libxdo-dev

Windows

No additional dependencies required.

Clone the Repository

git clone https://github.com/rubentalstra/trial-submission-studio.git

cd trial-submission-studio

Verify Rust Version

rustup show

Ensure you have Rust 1.92 or higher. To update:

rustup update stable

Build

Debug Build (faster compilation)

cargo build

Release Build (optimized, slower compilation)

cargo build --release

Run

Debug

cargo run --package tss-gui

Release

cargo run --release --package tss-gui

Or run the compiled binary directly:

./target/release/tss-gui # macOS/Linux

.\target\release\tss-gui.exe # Windows

Run Tests

# All tests

cargo test

# Specific crate

cargo test --package tss-submit

# With output

cargo test -- --nocapture

Run Lints

# Format check

cargo fmt --check

# Clippy lints

cargo clippy -- -D warnings

Project Structure

Trial Submission Studio is organized as a 6-crate Rust workspace:

trial-submission-studio/

├── crates/

│ ├── tss-gui/ # Desktop application (Iced 0.14.0)

│ ├── tss-submit/ # Mapping, normalization, validation, export

│ ├── tss-ingest/ # CSV loading

│ ├── tss-standards/ # CDISC standards loader

│ ├── tss-updater/ # Auto-update functionality

│ └── tss-updater-helper/ # macOS update helper

├── standards/ # Embedded CDISC standards

├── mockdata/ # Test datasets

└── docs/ # Documentation (this site)

Third-Party Licenses

When adding or updating dependencies, regenerate the licenses file:

# Install cargo-about (one-time)

cargo install cargo-about

# Generate licenses

cargo about generate about.hbs -o THIRD_PARTY_LICENSES.md

IDE Setup

RustRover / IntelliJ IDEA

- Open the project folder

- The Rust plugin will detect the workspace automatically

VS Code

- Install the

rust-analyzerextension - Open the project folder

Next Steps

- Contributing Guide - How to contribute

- Architecture Overview - Understand the codebase

Interface Overview

Trial Submission Studio features a clean, intuitive interface designed for clinical data programmers.

Welcome Screen

When you first launch the application, you’ll see the welcome screen where you can select your target CDISC standard and open a study folder:

Study Overview

After opening a study folder, Trial Submission Studio automatically discovers domains from your source data:

Main Window Layout

The application is organized into several key areas:

┌─────────────────────────────────────────────────────────────┐

│ Menu Bar │

├─────────────────────────────────────────────────────────────┤

│ Toolbar │

├──────────────────┬──────────────────────────────────────────┤

│ │ │

│ Navigation │ Main Content Area │

│ Panel │ │

│ │ - Data Preview │

│ - Import │ - Mapping Interface │

│ - Mapping │ - Validation Results │

│ - Validation │ - Export Options │

│ - Export │ │

│ │ │

├──────────────────┴──────────────────────────────────────────┤

│ Status Bar │

└─────────────────────────────────────────────────────────────┘

Menu Bar

File Menu

- Import CSV - Load source data

- Export - Save to XPT/XML formats

- Recent Files - Quick access to recent projects

- Exit - Close the application

Edit Menu

- Undo/Redo - Reverse or repeat actions

- Preferences - Application settings

Help Menu

- Documentation - Open this documentation

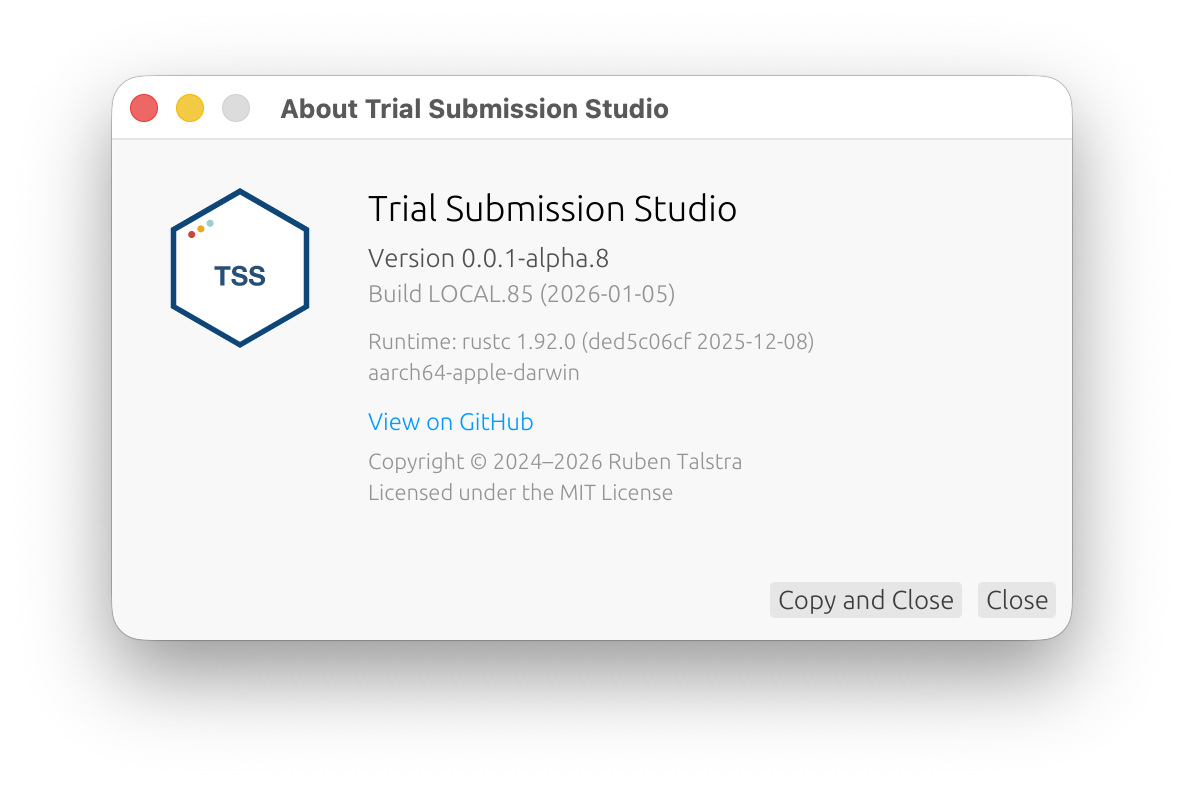

- About - Version and license information

- Third-Party Licenses - Dependency attributions

Toolbar

Quick access to common actions:

- Import - Load CSV file

- Validate - Run validation checks

- Export - Save output files

Navigation Panel

The left sidebar provides step-by-step workflow navigation:

- Import - Load and preview source data

- Domain - Select target SDTM domain

- Mapping - Map columns to variables

- Validation - Review validation results

- Export - Generate output files

Main Content Area

The central area displays context-sensitive content based on the current workflow step:

Import View

- File selection

- Data preview table

- Column type detection

- Schema information

Mapping View

- Source columns list

- Target variables list

- Mapping connections

- Match confidence scores

Validation View

- Validation rule results

- Error/warning/info messages

- Affected rows and columns

- Suggested fixes

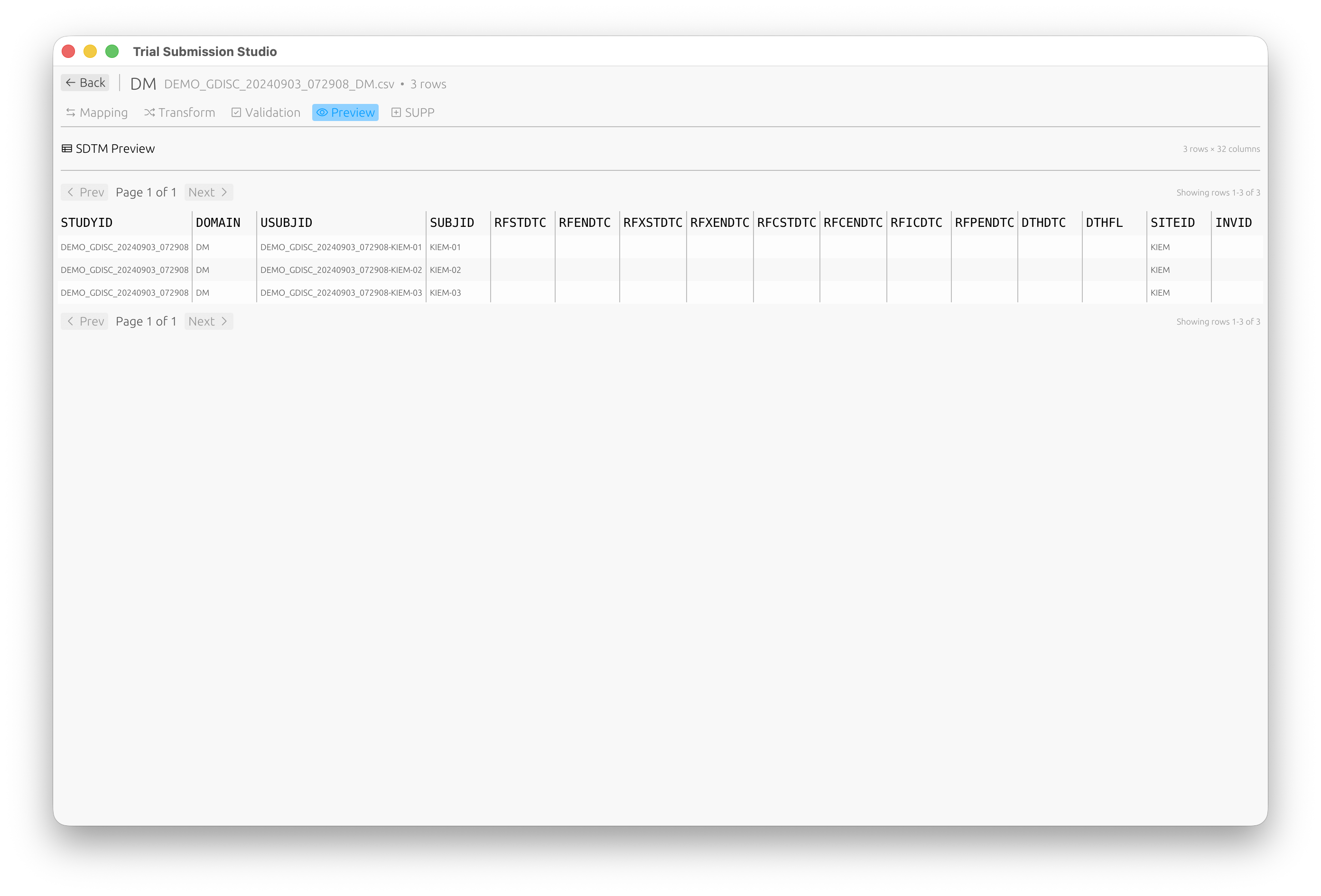

Preview View

Preview your SDTM-compliant data before export:

Export View

- Format selection

- Output options

- File destination

- Progress indicator

Status Bar

The bottom bar displays:

- Current file name

- Row/column counts

- Validation status

- Progress for long operations

Keyboard Shortcuts

| Action | macOS | Windows/Linux |

|---|---|---|

| Import | ⌘O | Ctrl+O |

| Export | ⌘E | Ctrl+E |

| Validate | ⌘R | Ctrl+R |

| Undo | ⌘Z | Ctrl+Z |

| Redo | ⌘⇧Z | Ctrl+Shift+Z |

| Preferences | ⌘, | Ctrl+, |

| Quit | ⌘Q | Alt+F4 |

Themes

Trial Submission Studio supports light and dark themes. Change via: Edit → Preferences → Appearance

Next Steps

- Importing Data - Learn about data import

- Column Mapping - Mapping interface guide

Importing Data

Trial Submission Studio accepts CSV files as input and automatically detects schema information.

Supported Input Format

Currently, Trial Submission Studio supports:

- CSV files (

.csv) - UTF-8 or ASCII encoding

- Comma-separated values

- Headers in first row

Import Methods

Drag and Drop

Simply drag a CSV file from your file manager and drop it onto the application window.

File Menu

- Click File → Import CSV

- Navigate to your file

- Click Open

Toolbar Button

Click the Import button in the toolbar.

Automatic Detection

When you import a file, Trial Submission Studio automatically:

Column Type Detection

Analyzes sample values to determine:

- Numeric - Integer or floating-point numbers

- Date/Time - Various date formats

- Text - Character strings

Domain Suggestion

Based on column names, suggests likely SDTM domains:

USUBJID,AGE,SEX→ Demographics (DM)AETERM,AESTDTC→ Adverse Events (AE)VSTESTCD,VSSTRESN→ Vital Signs (VS)

Date Format Detection

Automatically recognizes common date formats:

- ISO 8601:

2024-01-15 - US format:

01/15/2024 - EU format:

15-01-2024 - With time:

2024-01-15T09:30:00

Data Preview

After import, you’ll see:

Data Grid

- First 100 rows displayed

- Scroll to view more data

- Column headers with detected types

Summary Panel

- Total row count

- Total column count

- File size

- Encoding detected

Column Information

- Column name

- Detected type

- Sample values

- Null count

Handling Issues

Encoding Problems

If you see garbled characters:

- Ensure your file is UTF-8 encoded

- Re-save from your source application with UTF-8 encoding

Missing Headers

If your CSV lacks headers:

- Add a header row to your file

- Re-import

Large Files

For files with millions of rows:

- Import may take longer

- A progress indicator will show status

- Consider splitting into smaller files if needed

Best Practices

-

Clean your data before import

- Remove trailing whitespace

- Standardize date formats

- Check for encoding issues

-

Use descriptive column names

- Helps with automatic mapping suggestions

- Use SDTM-like naming when possible

-

Include all required data

- USUBJID for subject identification

- Domain-specific required variables

Next Steps

- Column Mapping - Map imported columns to SDTM variables

- Validation - Validate your data

Column Mapping

The mapping interface helps you connect your source CSV columns to SDTM variables.

Overview

Column mapping is a critical step that defines how your source data transforms into SDTM-compliant output.

flowchart LR

subgraph Source[Source CSV]

S1[SUBJ_ID]

S2[PATIENT_AGE]

S3[GENDER]

S4[VISIT_DATE]

end

subgraph Mapping[Fuzzy Matching]

M[Match<br/>Algorithm]

end

subgraph Target[SDTM Variables]

T1[USUBJID]

T2[AGE]

T3[SEX]

T4[RFSTDTC]

end

S1 --> M --> T1

S2 --> M --> T2

S3 --> M --> T3

S4 --> M --> T4

style M fill: #4a90d9, color: #fff

The Mapping Interface

┌─────────────────────────────────────────────────────────────┐

│ Source Columns │ Target Variables │

├─────────────────────────┼───────────────────────────────────┤

│ SUBJ_ID ────────│──▶ USUBJID │

│ PATIENT_AGE ────────│──▶ AGE │

│ GENDER ────────│──▶ SEX │

│ VISIT_DATE ────────│──▶ RFSTDTC │

│ RACE_DESC ────────│──▶ RACE │

│ [Unmapped] │ ETHNIC (Required) │

└─────────────────────────┴───────────────────────────────────┘

Automatic Mapping

Trial Submission Studio uses fuzzy matching to suggest mappings:

How It Works

- Analyzes source column names

- Compares against SDTM variable names

- Calculates similarity scores

- Suggests best matches

Match Confidence

- High (>80%) - Strong name similarity, auto-accepted

- Medium (50-80%) - Review recommended

- Low (<50%) - Manual mapping needed

Example Matches

| Source Column | Suggested Variable | Confidence |

|---|---|---|

SUBJECT_ID | USUBJID | 85% |

AGE | AGE | 100% |

GENDER | SEX | 75% |

VSTESTVAL | VSSTRESN | 70% |

Manual Mapping

To Map a Column

- Click on the source column

- Click on the target variable

- A connection line appears

To Unmap a Column

- Click on the connection line

- Or right-click and select “Remove Mapping”

To Change a Mapping

- Remove the existing mapping

- Create a new mapping

Required vs Optional Variables

Required Variables

Shown with a red indicator. Must be mapped for valid output:

STUDYID- Study identifierDOMAIN- Domain abbreviationUSUBJID- Unique subject identifier

Optional Variables

Shown without indicator. Map if data is available.

Expected Variables

Shown with yellow indicator. Expected for the domain but not strictly required.

Data Type Considerations

The mapping interface warns about type mismatches:

| Warning | Description |

|---|---|

| Type Mismatch | Source is text, target is numeric |

| Length Exceeded | Source values exceed SDTM length limits |

| Format Warning | Date format needs conversion |

Controlled Terminology

For variables with controlled terminology:

- The interface shows valid values

- Warns if source values don’t match

- Suggests value mappings

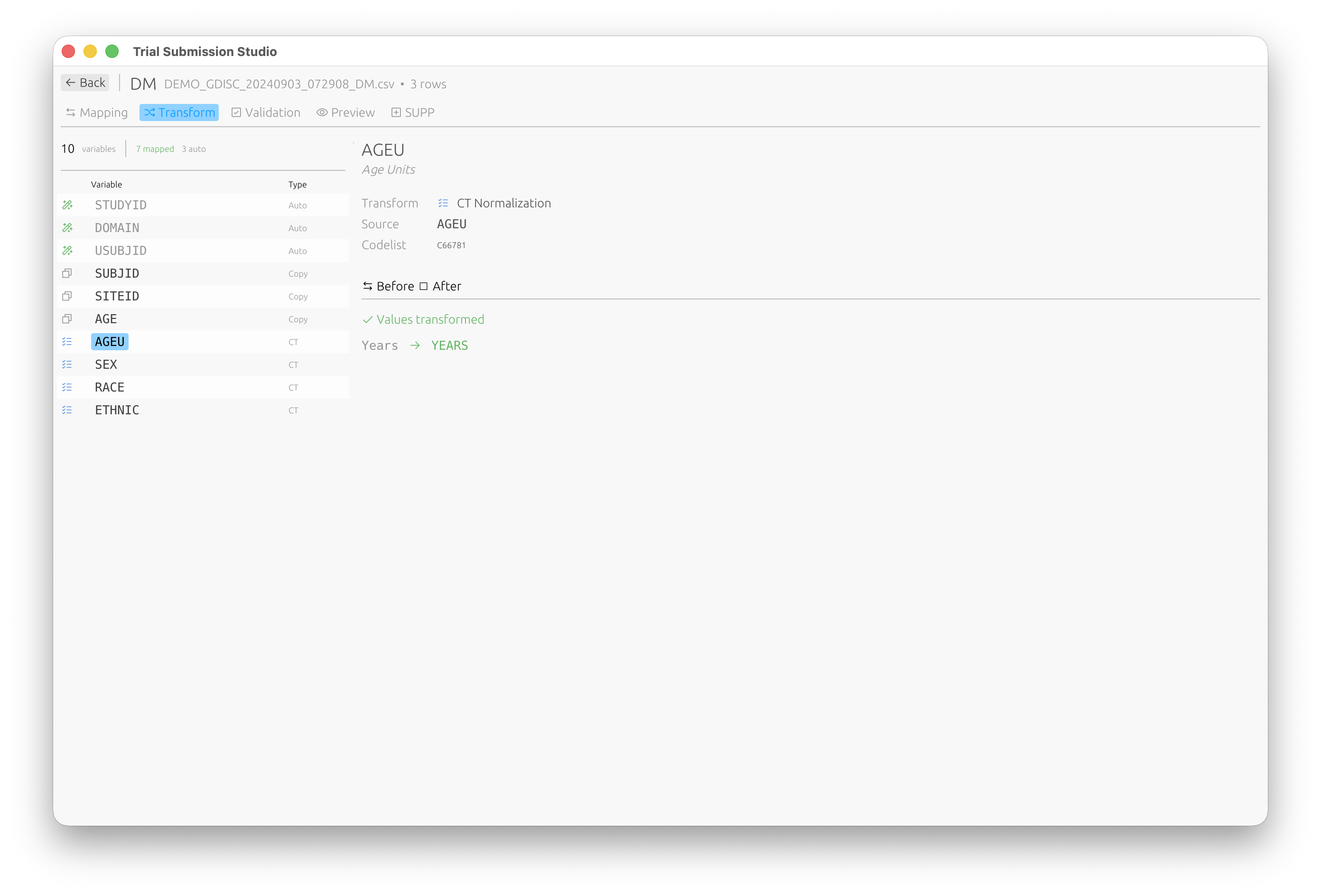

CT Normalization

The Transform tab allows you to normalize values to CDISC Controlled Terminology:

Values are automatically transformed to their standardized form (e.g., “Years” → “YEARS”).

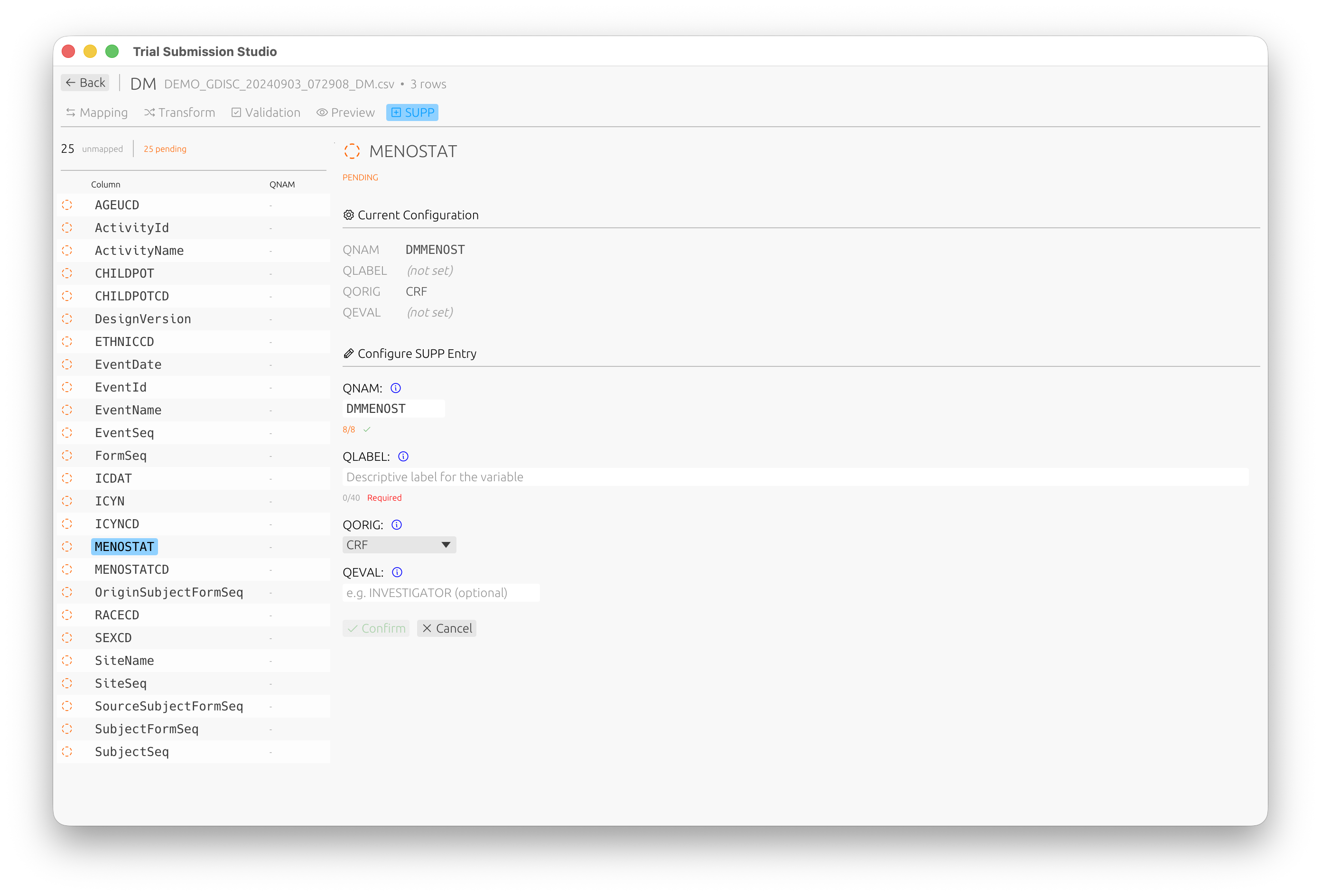

Supplemental Qualifiers (SUPP)

For non-standard variables that need to be captured as supplemental qualifiers, use the SUPP tab:

Configure QNAM, QLABEL, QORIG, and QEVAL for each supplemental qualifier variable.

Mapping Templates

Save a Template

- Complete your mappings

- File → Save Mapping Template

- Name your template

Load a Template

- Import your data

- File → Load Mapping Template

- Select the template

- Review and adjust as needed

Best Practices

- Review all automatic mappings - Don’t blindly accept

- Map required variables first - Ensure compliance

- Check controlled terminology - Validate allowed values

- Save templates - Reuse for similar datasets

Next Steps

- Validation - Validate your mappings

- SDTM Variables - Variable reference

Validation

Trial Submission Studio validates your data against CDISC standards before export.

Validation Overview

flowchart LR

subgraph Input

DATA[Mapped Data]

end

subgraph Checks

STRUCT[Structure<br/>Required variables]

CT[Terminology<br/>Codelist values]

CROSS[Cross-Domain<br/>Consistency]

end

subgraph Output

ERR[Errors]

WARN[Warnings]

INFO[Info]

end

DATA --> STRUCT --> CT --> CROSS

STRUCT --> ERR

CT --> WARN

CROSS --> INFO

style ERR fill: #f8d7da, stroke: #721c24

style WARN fill: #fff3cd, stroke: #856404

style INFO fill: #d1ecf1, stroke: #0c5460

Validation checks ensure your data:

- Conforms to SDTM structure

- Uses correct controlled terminology

- Meets FDA submission requirements

Running Validation

Automatic Validation

Validation runs automatically when you:

- Complete column mapping

- Make changes to mappings

- Prepare for export

Manual Validation

Click Validate in the toolbar or press Ctrl+R (⌘R on macOS).

Validation Results

Result Categories

| Category | Icon | Description |

|---|---|---|

| Error | Red | Must be fixed before export |

| Warning | Yellow | Should be reviewed |

| Info | Blue | Informational, no action required |

Results Panel

┌─────────────────────────────────────────────────────────────┐

│ Validation Results [✓] [⚠] [ℹ] │

├─────────────────────────────────────────────────────────────┤

│ ❌ SD0001: USUBJID is required but not mapped │

│ Rows affected: All │

│ Fix: Map a column to USUBJID │

├─────────────────────────────────────────────────────────────┤

│ ⚠️ CT0015: Value "M" not in SEX codelist │

│ Rows affected: 45, 67, 89 │

│ Expected: MALE, FEMALE, UNKNOWN │

├─────────────────────────────────────────────────────────────┤

│ ℹ️ INFO: 1250 rows will be exported │

└─────────────────────────────────────────────────────────────┘

Validation Rules

Structural Rules

| Rule ID | Description |

|---|---|

| SD0001 | Required variable missing |

| SD0002 | Invalid variable name |

| SD0003 | Variable length exceeded |

| SD0004 | Invalid data type |

Controlled Terminology Rules

| Rule ID | Description |

|---|---|

| CT0001 | Value not in codelist |

| CT0002 | Codelist not found |

| CT0003 | Invalid date format |

Cross-Domain Rules

| Rule ID | Description |

|---|---|

| XD0001 | USUBJID not consistent |

| XD0002 | Missing parent record |

| XD0003 | Duplicate keys |

Fixing Validation Errors

Mapping Errors

- Click on the error message

- The relevant mapping is highlighted

- Adjust the mapping or source data

Data Errors

- Note the affected rows

- Correct the source data

- Re-import and re-validate

Terminology Errors

- Review the expected values

- Map source values to controlled terms

- Or update source data to use standard terms

Controlled Terminology Validation

Supported Codelists

Trial Submission Studio includes embedded controlled terminology:

- CDISC CT 2025-09-26 (latest)

- CDISC CT 2025-03-28

- CDISC CT 2024-03-29

Codelist Validation

For variables like SEX, RACE, COUNTRY:

- Source values are checked against valid terms

- Invalid values are flagged

- Suggestions for correct values are provided

Validation Reports

Export Validation Report

- Complete validation

- File → Export Validation Report

- Choose format (PDF, HTML, CSV)

- Save the report

Report Contents

- Summary statistics

- All validation messages

- Affected data rows

- Recommendations

Best Practices

- Validate early and often - Fix issues as you go

- Address errors first - Then warnings

- Document exceptions - If warnings are intentional

- Keep validation reports - For audit trails

Next Steps

- Exporting Data - Export validated data

- Controlled Terminology - CT reference

Exporting Data

After mapping and validation, export your data to CDISC-compliant formats.

Export Formats

Trial Submission Studio supports multiple output formats:

| Format | Version | Description | Use Case |

|---|---|---|---|

| XPT | V5 | SAS Transport (FDA standard) | FDA submissions |

| XPT | V8 | Extended SAS Transport | Longer names/labels |

| Dataset-XML | 1.0 | CDISC XML format | Data exchange |

| Define-XML | 2.1 | Metadata documentation | Submission package |

XPT Export

XPT Version 5 (Default)

The FDA standard format with these constraints:

- Variable names: 8 characters max

- Labels: 40 characters max

- Compatible with SAS V5 Transport

XPT Version 8

Extended format supporting:

- Variable names: 32 characters

- Labels: 256 characters

- Note: Not all systems support V8

Export Steps

- Click Export in the toolbar

- Select XPT V5 or XPT V8

- Choose output location

- Click Save

XPT Options

| Option | Description |

|---|---|

| Include all variables | Export mapped and derived variables |

| Sort by keys | Order rows by key variables |

| Compress | Reduce file size |

Dataset-XML Export

CDISC ODM-based XML format for data exchange.

Features

- Human-readable format

- Full Unicode support

- Metadata included

- Schema validation

Export Steps

- Click Export

- Select Dataset-XML

- Configure options

- Click Save

Define-XML Export

Generate submission metadata documentation.

Define-XML 2.1

- Dataset definitions

- Variable metadata

- Controlled terminology

- Computational methods

- Value-level metadata

Export Steps

- Click Export

- Select Define-XML

- Review metadata

- Click Save

Batch Export

Export multiple domains at once:

- File → Batch Export

- Select domains to export

- Choose format(s)

- Set output directory

- Click Export All

Export Validation

Before export completes, the system verifies:

- All required variables are present

- Data types are correct

- Lengths don’t exceed limits

- Controlled terms are valid

Output Files

File Naming

Default naming convention:

{domain}.xpt- e.g.,dm.xpt,ae.xpt{domain}.xml- for Dataset-XMLdefine.xml- for Define-XML

Checksums

Each export generates:

- SHA256 checksum file (

.sha256) - Useful for submission verification

Quality Checks

Post-Export Verification

- Open the exported file in a viewer

- Verify row counts match

- Check variable order

- Review sample values

External Validation

Consider validating with:

- Pinnacle 21 Community

- SAS (if available)

- Other CDISC validators

Best Practices

- Validate before export - Fix all errors first

- Use XPT V5 for FDA - Standard format

- Generate checksums - For integrity verification

- Test with validators - Confirm compliance

- Keep source files - Maintain audit trail

Troubleshooting

Export Fails

| Issue | Solution |

|---|---|

| Validation errors | Fix errors before export |

| Disk full | Free up space |

| Permission denied | Check write permissions |

| File in use | Close file in other apps |

Output Issues

| Issue | Solution |

|---|---|

| Truncated values | Check length limits |

| Missing data | Verify mappings |

| Wrong encoding | Ensure UTF-8 source |

Next Steps

- Common Workflows - End-to-end examples

- XPT Format - XPT specification

- Define-XML - Define-XML guide

Common Workflows

Step-by-step guides for typical Trial Submission Studio use cases.

Workflow Overview

flowchart LR

subgraph "1. Import"

A[Load CSV]

end

subgraph "2. Configure"

B[Select Domain]

C[Map Columns]

end

subgraph "3. Quality"

D[Handle CT]

E[Validate]

end

subgraph "4. Output"

F[Export XPT]

end

A --> B --> C --> D --> E --> F

E -.->|Fix Issues| C

style A fill: #e8f4f8, stroke: #333

style F fill: #d4edda, stroke: #333

Workflow 1: Demographics (DM) Domain

Transform demographics source data to SDTM DM domain.

Source Data Example

SUBJECT_ID,AGE,SEX,RACE,ETHNIC,COUNTRY,SITE_ID

SUBJ001,45,Male,WHITE,NOT HISPANIC,USA,101

SUBJ002,38,Female,ASIAN,NOT HISPANIC,USA,102

SUBJ003,52,Male,BLACK,HISPANIC,USA,101

Steps

-

Import the CSV

- File → Import CSV

- Select your demographics file

-

Select DM Domain

- Click on “Domain Selection”

- Choose “DM - Demographics”

-

Map Columns

Source Target Notes SUBJECT_ID USUBJID Subject identifier AGE AGE Age in years SEX SEX Maps to controlled terminology RACE RACE Controlled terminology ETHNIC ETHNIC Controlled terminology COUNTRY COUNTRY ISO 3166 codes SITE_ID SITEID Site identifier -

Handle Controlled Terminology

- “Male” → “M” (or keep if using extensible CT)

- “Female” → “F”

- Review RACE and ETHNIC values

-

Validate

- Click Validate

- Address any errors

-

Export

- Export → XPT V5

- Save as

dm.xpt

Workflow 2: Adverse Events (AE) Domain

Transform adverse event data to SDTM AE domain.

Source Data Example

SUBJECT_ID,AE_TERM,START_DATE,END_DATE,SEVERITY,SERIOUS

SUBJ001,Headache,2024-01-15,2024-01-17,MILD,N

SUBJ001,Nausea,2024-02-01,,MODERATE,N

SUBJ002,Rash,2024-01-20,2024-01-25,SEVERE,Y

Steps

-

Import CSV

-

Select AE Domain

-

Map Columns

Source Target Notes SUBJECT_ID USUBJID AE_TERM AETERM Verbatim term START_DATE AESTDTC Start date END_DATE AEENDTC End date (can be blank) SEVERITY AESEV Controlled terminology SERIOUS AESER Y/N -

Derive Required Variables

- AESEQ (sequence number) - auto-generated

- AEDECOD (dictionary term) - if available

-

Validate and Export

Workflow 3: Vital Signs (VS) Domain

Transform vital signs measurements to SDTM VS domain.

Source Data Example

SUBJECT_ID,VISIT,TEST,RESULT,UNIT,DATE

SUBJ001,BASELINE,SYSBP,120,mmHg,2024-01-10

SUBJ001,BASELINE,DIABP,80,mmHg,2024-01-10

SUBJ001,WEEK 4,SYSBP,118,mmHg,2024-02-07

Steps

-

Import CSV

-

Select VS Domain

-

Map Columns

Source Target Notes SUBJECT_ID USUBJID VISIT VISIT Visit name TEST VSTESTCD Test code RESULT VSSTRESN Numeric result UNIT VSSTRESU Result unit DATE VSDTC Collection date -

Map Test Codes

- SYSBP → Systolic Blood Pressure

- DIABP → Diastolic Blood Pressure

-

Validate and Export

Workflow 4: Batch Processing

Process multiple domains from one source file.

Source Data

A comprehensive dataset with columns for multiple domains.

Steps

- Import the source file

- Process each domain

- Filter relevant columns

- Map to domain variables

- Validate

- Batch Export

- File → Batch Export

- Select all processed domains

- Export to output folder

Workflow 5: Re-processing with Template

Use a saved mapping template for similar data.

Steps

-

First Time Setup

- Import sample data

- Create mappings

- Save template: File → Save Mapping Template

-

Subsequent Processing

- Import new data (same structure)

- Load template: File → Load Mapping Template

- Review and adjust if needed

- Validate and export

Tips for All Workflows

Before You Start

- Review source data quality

- Identify required variables

- Prepare controlled terminology mappings

During Processing

- Validate after each major step

- Document any decisions

- Keep notes on exceptions

After Export

- Verify output files

- Run external validation

- Archive source and output files

Next Steps

- Troubleshooting - Common issues

- SDTM Domains - Domain reference

Troubleshooting

Common issues and their solutions when using Trial Submission Studio.

Import Issues

File Won’t Import

| Symptom | Cause | Solution |

|---|---|---|

| “Invalid file format” | Not a CSV file | Ensure file is CSV format |

| “Encoding error” | Non-UTF8 encoding | Re-save as UTF-8 |

| “No data found” | Empty file or wrong delimiter | Check file contents |

| “Parse error” | Malformed CSV | Fix CSV structure |

Data Appears Garbled

Cause: Encoding mismatch

Solution:

- Open the file in a text editor

- Save with UTF-8 encoding

- Re-import

Missing Columns

Cause: Header row issues

Solution:

- Verify first row contains headers

- Check for BOM (byte order mark) issues

- Remove hidden characters

Mapping Issues

No Suggested Mappings

Cause: Column names don’t match SDTM variables

Solution:

- Manually map columns

- Consider renaming source columns

- Create a mapping template for reuse

Wrong Automatic Mappings

Cause: Fuzzy matching misidentified variables

Solution:

- Review all automatic mappings

- Manually correct incorrect mappings

- Adjust match confidence threshold in settings

Can’t Map Required Variable

Cause: Source data missing required information

Solution:

- Add the missing data to source file

- Derive from other columns if possible

- Consult with data manager

Validation Issues

Too Many Errors

Cause: Data quality issues or incorrect mappings

Solution:

- Address errors in priority order

- Fix mapping issues first

- Clean source data if needed

- Re-validate after each fix

Controlled Terminology Errors

Cause: Values don’t match CDISC CT

Solution:

- Review expected values in the error message

- Map source values to standard terms

- Update source data if appropriate

Date Format Errors

Cause: Non-ISO date formats

Solution:

- Convert dates to ISO 8601 format (YYYY-MM-DD)

- Or use partial dates where appropriate (YYYY-MM, YYYY)

Export Issues

Export Fails

| Error | Cause | Solution |

|---|---|---|

| “Validation errors exist” | Unresolved errors | Fix all errors first |

| “Permission denied” | No write access | Check folder permissions |

| “Disk full” | Insufficient space | Free up disk space |

| “File in use” | File open elsewhere | Close file in other apps |

Truncated Data in XPT

Cause: Values exceed XPT limits

Solution:

- XPT V5: Max 200 chars per variable

- Check variable lengths before export

- Consider using XPT V8 for longer values

Missing Variables in Output

Cause: Variables not mapped or derived

Solution:

- Verify all required mappings

- Check if derived variables were created

- Review export settings

Performance Issues

Slow Import

Cause: Large file size

Solution:

- Allow time for large files

- Consider splitting into smaller files

- Close other applications

- Increase available RAM

Application Freezes

Cause: Processing large datasets

Solution:

- Wait for operation to complete

- Check progress indicator

- If unresponsive after 5+ minutes, restart

- Process smaller datasets

High Memory Usage

Cause: Large dataset in memory

Solution:

- Close unused files

- Process one domain at a time

- Restart application to free memory

Application Issues

Application Won’t Start

macOS:

# If blocked by Gatekeeper

xattr -d com.apple.quarantine /Applications/Trial\ Submission\ Studio.app

Linux:

# Ensure executable permission

chmod +x trial-submission-studio

Windows:

- Run as administrator

- Check antivirus isn’t blocking

Crashes on Startup

Solution:

- Delete configuration files:

- macOS:

~/Library/Application Support/trial-submission-studio/ - Windows:

%APPDATA%\trial-submission-studio\ - Linux:

~/.config/trial-submission-studio/

- macOS:

- Reinstall the application

Settings Not Saved

Cause: Permission issues

Solution:

- Ensure write access to config directory

- Run application with appropriate permissions

Getting Help

Collect Information

Before reporting an issue, gather:

- Application version (Help → About)

- Operating system and version

- Steps to reproduce

- Error messages (screenshots)

- Sample data (anonymized)

Report an Issue

- Check existing issues

- Create a new issue

- Include collected information

Community Support

Quick Reference

Keyboard Shortcuts for Recovery

| Action | Windows/Linux | macOS |

|---|---|---|

| Force quit | Alt+F4 | ⌘Q |

| Cancel operation | Esc | Esc |

| Undo | Ctrl+Z | ⌘Z |

Log Files

Application logs are located at:

- macOS:

~/Library/Logs/trial-submission-studio/ - Windows:

%LOCALAPPDATA%\trial-submission-studio\logs\ - Linux:

~/.local/share/trial-submission-studio/logs/

Include relevant log excerpts when reporting issues.

CDISC Standards Overview

Trial Submission Studio supports CDISC (Clinical Data Interchange Standards Consortium) standards for regulatory submissions.

What is CDISC?

CDISC develops global data standards that streamline clinical research and enable connections to healthcare. These standards are required by regulatory agencies including the FDA and PMDA.

Standards Hierarchy

flowchart TD

CDISC[CDISC Standards]

CDISC --> SDTM[SDTM]

CDISC --> ADAM[ADaM]

CDISC --> SEND[SEND]

CDISC --> CT[Controlled Terminology]

SDTM --> IG34[IG v3.4]

ADAM --> IG13[IG v1.3]

SEND --> IG311[IG v3.1.1]

style CDISC fill:#4a90d9,color:#fff

style SDTM fill:#50c878,color:#fff

style ADAM fill:#f5a623,color:#fff

style SEND fill:#9b59b6,color:#fff

Supported Standards

Currently Implemented

| Standard | Version | Status |

|---|---|---|

| SDTM-IG | 3.4 | Supported |

| Controlled Terminology | 2024-2025 | Supported |

Planned Support

| Standard | Version | Status |

|---|---|---|

| ADaM-IG | 1.3 | Planned |

| SEND-IG | 3.1.1 | Planned |

SDTM (Study Data Tabulation Model)

SDTM is the standard structure for submitting study data to regulatory authorities.

Key Concepts

- Domains: Logical groupings of data (e.g., Demographics, Adverse Events)

- Variables: Individual data elements within domains

- Controlled Terminology: Standardized values for specific variables

Learn More

Controlled Terminology

CDISC Controlled Terminology (CT) provides standardized values for SDTM variables.

Embedded Versions

Trial Submission Studio includes the following CT packages:

- CDISC CT 2025-09-26 (latest)

- CDISC CT 2025-03-28

- CDISC CT 2024-03-29

Learn More

ADaM (Analysis Data Model)

ADaM is the standard for analysis-ready datasets derived from SDTM.

Note

ADaM support is planned for a future release.

SEND (Standard for Exchange of Nonclinical Data)

SEND is SDTM for nonclinical (animal) studies.

Note

SEND support is planned for a future release.

FDA Requirements

Electronic Submissions

The FDA requires CDISC standards for:

- New Drug Applications (NDA)

- Biologics License Applications (BLA)

- Abbreviated New Drug Applications (ANDA)

Study Data Technical Conformance Guide

Trial Submission Studio aligns with FDA’s Study Data Technical Conformance Guide requirements:

- XPT V5 format

- Define-XML 2.1

- Controlled Terminology validation

Resources

Official CDISC Resources

FDA Resources

Next Steps

- SDTM Introduction - Dive into SDTM

- Controlled Terminology - CT reference

SDTM Introduction

The Study Data Tabulation Model (SDTM) is the standard for organizing and formatting human clinical trial data for submission to regulatory authorities.

Purpose

SDTM provides:

- Consistent structure for clinical trial data

- Standardized naming conventions

- Regulatory compliance with FDA requirements

- Interoperability between systems and organizations

Key Concepts

Domains

SDTM organizes data into domains - logical groupings of related observations:

| Category | Examples |

|---|---|

| Special Purpose | DM (Demographics), CO (Comments), SE (Subject Elements), SV (Subject Visits) |

| Interventions | CM (Concomitant Meds), EX (Exposure), SU (Substance Use) |

| Events | AE (Adverse Events), DS (Disposition), MH (Medical History) |

| Findings | LB (Labs), VS (Vital Signs), EG (ECG), PE (Physical Exam) |

Variables

Each domain contains variables - individual data elements:

| Type | Description | Examples |

|---|---|---|

| Identifier | Subject/study identification | STUDYID, USUBJID, DOMAIN |

| Topic | Focus of the observation | AETERM, VSTEST, LBTEST |

| Timing | When observation occurred | AESTDTC, VSDTC, VISITNUM |

| Qualifier | Additional context | AESEV, VSPOS, LBORRES |

Controlled Terminology

Many variables require values from controlled terminology (CT):

- Standardized value lists

- Ensures consistency across studies

- Required for regulatory submissions

SDTM Structure

flowchart TB

subgraph "SDTM Domain Classes"

direction TB

SP[Special Purpose<br/>DM, CO, SE, SV]

INT[Interventions<br/>CM, EX, SU]

EVT[Events<br/>AE, DS, MH]

FIND[Findings<br/>LB, VS, EG, PE]

end

subgraph "Variable Types"

ID[Identifiers<br/>STUDYID, USUBJID]

TOPIC[Topic Variables<br/>--TERM, --TEST]

TIMING[Timing Variables<br/>--STDTC, --ENDTC]

QUAL[Qualifiers<br/>--SEV, --RES]

end

SP --> ID

INT --> ID

EVT --> ID

FIND --> ID

ID --> TOPIC

TOPIC --> TIMING

TIMING --> QUAL

style SP fill: #4a90d9, color: #fff

style INT fill: #50c878, color: #fff

style EVT fill: #f5a623, color: #fff

style FIND fill: #9b59b6, color: #fff

General Observation Classes

- Interventions: Treatments applied to subjects

- Events: Occurrences during study participation

- Findings: Observations and test results

Variable Roles

| Role | Purpose | Example |

|---|---|---|

| Identifier | Link records across domains | USUBJID |

| Topic | Describe the observation | AETERM |

| Timing | Capture when | AESTDTC |

| Qualifier | Provide context | AESEV |

| Rule | Link to analysis rules | (via Define-XML) |

Working with SDTM in Trial Submission Studio

Import Flow

- Load source CSV data

- Select target SDTM domain

- Map source columns to SDTM variables

- Handle controlled terminology

- Validate against SDTM rules

- Export to XPT format

Variable Requirements

- Required: Must be present and populated

- Expected: Should be present if applicable

- Permissible: Allowed but not required

Best Practices

- Map identifiers first: STUDYID, DOMAIN, USUBJID

- Use controlled terminology: For variables requiring CT

- Follow naming conventions: Variable names, labels

- Validate early: Catch issues before export

SDTM Versions

Trial Submission Studio currently supports:

- SDTM-IG 3.4 (current FDA standard)

Version History

| Version | Release | Notes |

|---|---|---|

| 3.4 | 2021 | Current FDA standard |

| 3.3 | 2018 | |

| 3.2 | 2013 | |

| 3.1.2 | 2008 |

Next Steps

- SDTM Domains - Domain reference

- SDTM Variables - Variable details

- Validation Rules - Compliance checking

- Controlled Terminology - CT reference

SDTM Domains

SDTM organizes clinical trial data into domains based on the type of observation.

Domain Classification

flowchart TD

subgraph "SDTM Domains"

direction TB

SPE[Special Purpose]

INT[Interventions]

EVT[Events]

FND[Findings]

end

SPE --> DM[DM - Demographics]

SPE --> TA[TA - Trial Arms]

SPE --> TS[TS - Trial Summary]

INT --> CM[CM - Medications]

INT --> EX[EX - Exposure]

INT --> PR[PR - Procedures]

EVT --> AE[AE - Adverse Events]

EVT --> MH[MH - Medical History]

EVT --> DS[DS - Disposition]

FND --> LB[LB - Lab Results]

FND --> VS[VS - Vital Signs]

FND --> EG[EG - ECG Results]

style SPE fill:#4a90d9,color:#fff

style INT fill:#50c878,color:#fff

style EVT fill:#f5a623,color:#fff

style FND fill:#9b59b6,color:#fff

Domain Categories

Special Purpose Domains

Core structural domains required for all submissions.

| Domain | Name | Description |

|---|---|---|

| DM | Demographics | Subject demographic information |

| CO | Comments | Free-text comments |

| SE | Subject Elements | Subject milestones |

| SV | Subject Visits | Visits for each subject |

| TA | Trial Arms | Planned study arms |

| TD | Trial Disease | Disease descriptions |

| TE | Trial Elements | Planned protocol elements |

| TI | Trial Inclusion/Exclusion | Eligibility criteria |

| TS | Trial Summary | Study-level parameters |

| TV | Trial Visits | Planned visits |

Interventions Domains

Treatments and substances given to or used by subjects.

| Domain | Name | Description |

|---|---|---|

| CM | Concomitant Medications | Non-study medications |

| EC | Exposure as Collected | Exposure data as collected |

| EX | Exposure | Study treatment exposure |

| PR | Procedures | Non-study procedures |

| SU | Substance Use | Tobacco, alcohol, etc. |

Events Domains

Discrete occurrences during study participation.

| Domain | Name | Description |

|---|---|---|

| AE | Adverse Events | All adverse events |

| CE | Clinical Events | Non-adverse clinical events |

| DS | Disposition | Subject status at milestones |

| DV | Protocol Deviations | Protocol violations |

| HO | Healthcare Encounters | Hospitalizations, ER visits |

| MH | Medical History | Prior conditions |

Findings Domains

Observations and measurements.

| Domain | Name | Description |

|---|---|---|

| DA | Drug Accountability | Drug dispensing/return |

| DD | Death Details | Cause of death details |

| EG | ECG Results | Electrocardiogram data |

| FT | Functional Tests | Functional assessments |

| IE | Inclusion/Exclusion | Subject eligibility |

| IS | Immunogenicity Specimen | Sample assessments |

| LB | Lab Results | Laboratory tests |

| MB | Microbiology Specimen | Microbiology samples |

| MI | Microscopic Findings | Histopathology |

| MK | Musculoskeletal | Musculoskeletal findings |

| MO | Morphology | Imaging morphology |

| MS | Microbiology Susceptibility | Antibiotic susceptibility |

| NV | Nervous System | Neurological findings |

| OE | Ophthalmology | Eye exam results |

| PC | Pharmacokinetics Concentrations | Drug concentrations |

| PE | Physical Exam | Physical examination |

| PP | PK Parameters | Pharmacokinetic parameters |

| QS | Questionnaires | PRO/questionnaire data |

| RE | Respiratory | Pulmonary function |

| RP | Reproductive | Reproductive findings |

| RS | Disease Response | Tumor response |

| SC | Subject Characteristics | Additional demographics |

| SS | Subject Status | Subject enrollment status |

| TR | Tumor/Lesion Results | Tumor measurements |

| TU | Tumor/Lesion Identification | Tumor identification |

| UR | Urinary System | Urological findings |

| VS | Vital Signs | Vital sign measurements |

Common Domain Details

DM - Demographics

Required for all studies. Contains one record per subject.

Key Variables:

- USUBJID (Unique Subject ID)

- AGE, AGEU (Age and units)

- SEX, RACE, ETHNIC

- ARM, ARMCD (Study arm)

- RFSTDTC, RFENDTC (Reference dates)

- COUNTRY, SITEID

AE - Adverse Events

Captures all adverse events during the study.

Key Variables:

- AETERM (Verbatim term)

- AEDECOD (Dictionary-coded term)

- AESTDTC, AEENDTC (Start/end dates)

- AESEV (Severity)

- AESER (Serious)

- AEREL (Relationship to treatment)

- AEOUT (Outcome)

VS - Vital Signs

Captures vital sign measurements.

Key Variables:

- VSTESTCD, VSTEST (Test code/name)

- VSORRES, VSSTRESC, VSSTRESN (Results)

- VSORRESU, VSSTRESU (Units)

- VSPOS (Position)

- VSDTC (Date/time)

- VISITNUM, VISIT

LB - Laboratory Results

Captures laboratory test results.

Key Variables:

- LBTESTCD, LBTEST (Test code/name)

- LBORRES, LBSTRESC, LBSTRESN (Results)

- LBORRESU, LBSTRESU (Units)

- LBSPEC (Specimen type)

- LBDTC (Date/time)

- LBNRIND (Reference range indicator)

Custom Domains

For data not fitting standard domains, create custom domains:

- Two-letter code starting with X, Y, or Z

- Follow general observation class rules

- Document in Define-XML

Next Steps

- SDTM Variables - Variable reference

- Validation Rules - Domain validation

SDTM Variables

Variables are the individual data elements within SDTM domains.

Variable Categories

Identifier Variables

Identify the study, subject, and domain.

| Variable | Label | Description |

|---|---|---|

| STUDYID | Study Identifier | Unique study ID |

| DOMAIN | Domain Abbreviation | Two-letter domain code |

| USUBJID | Unique Subject ID | Unique across all studies |

| SUBJID | Subject ID | Subject ID within study |

| SITEID | Study Site Identifier | Site number |

Topic Variables

Describe what was observed.

| Domain | Variable | Description |

|---|---|---|

| AE | AETERM | Adverse event term |

| CM | CMTRT | Medication name |

| LB | LBTEST | Lab test name |

| VS | VSTEST | Vital sign test |

Timing Variables

Capture when observations occurred.

| Variable | Label | Description |

|---|---|---|

| –DTC | Date/Time | ISO 8601 date/time |

| –STDTC | Start Date/Time | Start of observation |

| –ENDTC | End Date/Time | End of observation |

| –DY | Study Day | Study day number |

| VISITNUM | Visit Number | Numeric visit identifier |

| VISIT | Visit Name | Visit label |

Qualifier Variables

Provide additional context.

| Type | Examples | Description |

|---|---|---|

| Grouping | –CAT, –SCAT | Category, subcategory |

| Result | –ORRES, –STRESC | Original/standard result |

| Record | –SEQ, –GRPID | Sequence, grouping |

| Synonym | –DECOD, –MODIFY | Coded/modified terms |

Variable Naming Conventions

Prefix Pattern

Most variables use a domain-specific prefix:

AE+TERM=AETERMVS+TESTCD=VSTESTCDLB+ORRES=LBORRES

Common Suffixes

| Suffix | Meaning | Example |

|---|---|---|

--TESTCD | Test Code | VSTESTCD, LBTESTCD |

--TEST | Test Name | VSTEST, LBTEST |

--ORRES | Original Result | VSORRES, LBORRES |

--ORRESU | Original Units | VSORRESU, LBORRESU |

--STRESC | Standardized Result (Char) | VSSTRESC |

--STRESN | Standardized Result (Num) | VSSTRESN |

--STRESU | Standardized Units | VSSTRESU |

--STAT | Status | VSSTAT (NOT DONE) |

--REASND | Reason Not Done | VSREASND |

--LOC | Location | VSLOC |

--DTC | Date/Time | VSDTC, AESTDTC |

Data Types

Character Variables

- Text values

- Max length: 200 characters (XPT V5)

- Example: AETERM, VSTEST

Numeric Variables

- Integer or floating-point

- Example: AGE, VSSTRESN, LBSTRESN

Date/Time Variables

ISO 8601 format:

- Full:

2024-01-15T09:30:00 - Date only:

2024-01-15 - Partial:

2024-01,2024

Variable Requirements

Required Variables

Must be present and populated for every record.

| Domain | Required Variables |

|---|---|

| All | STUDYID, DOMAIN, USUBJID |

| DM | RFSTDTC, RFENDTC, SITEID, ARM, ARMCD |

| AE | AETERM, AEDECOD, AESTDTC |

| VS | VSTESTCD, VSTEST, VSORRES, VSDTC |

Expected Variables

Should be present when applicable.

| Domain | Expected Variables |

|---|---|

| AE | AEENDTC, AESEV, AESER, AEREL |

| VS | VSSTRESN, VSSTRESU, VISITNUM |

Permissible Variables

Can be included if relevant data exists.

Controlled Terminology

Variables requiring controlled terminology:

| Variable | Codelist |

|---|---|

| SEX | Sex |

| RACE | Race |

| ETHNIC | Ethnicity |

| COUNTRY | Country |

| AESEV | Severity |

| AESER | No Yes Response |

| VSTESTCD | Vital Signs Test Code |

| LBTESTCD | Lab Test Code |

Variable Metadata

Label

40 characters max (XPT V5):

- Descriptive text

- Example: “Adverse Event Reported Term”

Length

Define appropriate length for each variable:

- Consider actual data values

- XPT V5 max: 200 characters

Order

Maintain consistent variable ordering:

- Identifier variables

- Topic variables

- Qualifier variables

- Timing variables

Next Steps

- Validation Rules - Variable validation

- Controlled Terminology - CT values

SDTM Validation Rules

Trial Submission Studio validates data against SDTM implementation guide rules.

Validation Categories

Structural Validation

Checks data structure and format.

| Rule ID | Description | Severity |

|---|---|---|

| SD0001 | Required variable missing | Error |

| SD0002 | Invalid variable name | Error |

| SD0003 | Variable length exceeded | Error |

| SD0004 | Invalid data type | Error |

| SD0005 | Duplicate records | Warning |

| SD0006 | Invalid domain code | Error |

Content Validation

Checks data values and relationships.

| Rule ID | Description | Severity |

|---|---|---|

| CT0001 | Value not in controlled terminology | Error |

| CT0002 | Invalid date format | Error |

| CT0003 | Date out of valid range | Warning |

| CT0004 | Numeric value out of range | Warning |

| CT0005 | Missing required value | Error |

Cross-Record Validation

Checks relationships between records.

| Rule ID | Description | Severity |

|---|---|---|

| XR0001 | USUBJID not in DM | Error |

| XR0002 | Duplicate key values | Error |

| XR0003 | Missing parent record | Warning |

| XR0004 | Inconsistent dates across domains | Warning |

Common Validation Rules

Identifier Rules

STUDYID

- Must be present in all records

- Must be consistent across domains

- Cannot be null or empty

USUBJID

- Must be present in all records

- Must exist in DM domain

- Must be unique per subject

DOMAIN

- Must match the domain abbreviation

- Must be uppercase

- Must be 2 characters

Date/Time Rules

–DTC Variables

- Must follow ISO 8601 format

- Supported formats:

YYYY-MM-DDTHH:MM:SSYYYY-MM-DDYYYY-MMYYYY

Date Ranges

- End date cannot precede start date

- Study dates should be within study period

Controlled Terminology Rules

SEX

Valid values:

M(Male)F(Female)U(Unknown)UNDIFFERENTIATED

AESEV

Valid values:

MILDMODERATESEVERE

AESER

Valid values:

Y(Yes)N(No)

Validation Report

Error Summary

┌─────────────────────────────────────────────────────────────┐

│ Validation Summary │

├─────────────────────────────────────────────────────────────┤

│ Errors: 5 │

│ Warnings: 12 │

│ Info: 3 │

├─────────────────────────────────────────────────────────────┤

│ Domain: DM │

│ - 2 Errors │

│ - 3 Warnings │

│ │

│ Domain: AE │

│ - 3 Errors │

│ - 9 Warnings │

└─────────────────────────────────────────────────────────────┘

Error Details

Each error includes:

- Rule ID: Unique identifier

- Severity: Error/Warning/Info

- Description: What’s wrong

- Location: Affected rows/columns

- Suggestion: How to fix

Fixing Validation Issues

Mapping Issues

- Verify correct source column is mapped

- Check data type compatibility

- Ensure all required variables are mapped

Data Issues

- Review affected rows

- Correct values in source data

- Re-import and re-validate

Terminology Issues

- Check expected values in codelist

- Map source values to standard terms

- Use value-level mapping if needed

Custom Validation

Severity Overrides

Some warnings can be suppressed if intentional:

- Review the warning

- Document the reason

- Mark as reviewed (if applicable)

Adding Context

For validation reports:

- Add comments explaining exceptions

- Document data collection differences

- Note protocol-specific variations

Best Practices

-

Validate incrementally

- After initial mapping

- After each significant change

- Before final export

-

Address errors first

- Errors block export

- Warnings should be reviewed

- Info messages are FYI

-

Document exceptions

- Why a warning is acceptable

- Protocol-specific reasons

- Historical data limitations

-

Review validation reports

- Keep for audit trail

- Share with data management

- Include in submission package

Next Steps

- Controlled Terminology - Valid values

- Exporting Data - Export after validation

Controlled Terminology

CDISC Controlled Terminology (CT) provides standardized values for SDTM variables.

Overview

Controlled Terminology ensures:

- Consistency across studies and organizations

- Interoperability between systems

- Regulatory compliance with FDA requirements

Embedded CT Packages

Trial Submission Studio includes the following CT versions:

| Version | Release Date | Status |

|---|---|---|

| 2024-12-20 | December 2024 | Current |

| 2024-09-27 | September 2024 | Supported |

| 2024-06-28 | June 2024 | Supported |

Common Codelists

SEX (C66731)

| Code | Decoded Value |

|---|---|

| M | MALE |

| F | FEMALE |

| U | UNKNOWN |

| UNDIFFERENTIATED | UNDIFFERENTIATED |

RACE (C74457)

| Decoded Value |

|---|

| AMERICAN INDIAN OR ALASKA NATIVE |

| ASIAN |

| BLACK OR AFRICAN AMERICAN |

| NATIVE HAWAIIAN OR OTHER PACIFIC ISLANDER |

| WHITE |

| MULTIPLE |

| NOT REPORTED |

| UNKNOWN |

ETHNIC (C66790)

| Decoded Value |

|---|

| HISPANIC OR LATINO |

| NOT HISPANIC OR LATINO |

| NOT REPORTED |

| UNKNOWN |

COUNTRY (C66729)

ISO 3166-1 alpha-3 country codes:

- USA, CAN, GBR, DEU, FRA, JPN, etc.

AESEV (C66769) - Severity

| Decoded Value |

|---|

| MILD |

| MODERATE |

| SEVERE |

AESER (C66742) - Serious

| Code | Decoded Value |

|---|---|

| Y | Y |

| N | N |

NY (C66742) - No Yes Response

| Code | Decoded Value |

|---|---|

| Y | Y |

| N | N |

VSTESTCD (C66741) - Vital Signs Test Codes

| Code | Decoded Value |

|---|---|

| BMI | Body Mass Index |

| DIABP | Diastolic Blood Pressure |

| HEIGHT | Height |

| HR | Heart Rate |

| PULSE | Pulse Rate |

| RESP | Respiratory Rate |

| SYSBP | Systolic Blood Pressure |

| TEMP | Temperature |

| WEIGHT | Weight |

LBTESTCD - Lab Test Codes

Common examples:

| Code | Description |

|---|---|

| ALB | Albumin |

| ALT | Alanine Aminotransferase |

| AST | Aspartate Aminotransferase |

| BILI | Bilirubin |

| BUN | Blood Urea Nitrogen |

| CREAT | Creatinine |

| GLUC | Glucose |

| HGB | Hemoglobin |

| PLAT | Platelet Count |

| WBC | White Blood Cell Count |

Extensible vs Non-Extensible

Non-Extensible Codelists

Values must exactly match the codelist:

- SEX

- COUNTRY

- Unit codelists

Extensible Codelists

Additional values allowed with sponsor definition:

- RACE (can add study-specific values)

- Some test codes

Using CT in Trial Submission Studio

Automatic Validation

When you map variables with controlled terminology:

- Values are checked against the codelist

- Non-matching values are flagged

- Suggestions are provided

Value Mapping

For source values not in CT format:

- Create value-level mappings

- Map “Male” → “M”, “Female” → “F”

- Apply consistently

CT Version Selection

- Go to Settings → Controlled Terminology

- Select the appropriate CT version

- Validation uses selected version

Handling CT Errors

Value Not in Codelist

Error: “Value ‘XYZ’ not found in codelist”

Solutions:

- Check spelling/case

- Find the correct CT value

- Map source value to CT value

- For extensible codelists, document new value

Common Mappings

| Source Value | CT Value |

|---|---|

| Male | M |

| Female | F |

| Yes | Y |

| No | N |

| Caucasian | WHITE |

| African American | BLACK OR AFRICAN AMERICAN |

Updating CT

New CT versions are released quarterly by CDISC. To use newer versions:

- Check for Trial Submission Studio updates

- New CT is included in app updates

- Select version in settings

Resources

Official References

Next Steps

- SDTM Variables - Variables requiring CT

- Validation - CT validation in practice

ADaM (Preview)

The Analysis Data Model (ADaM) defines standards for analysis-ready datasets.

Note

ADaM support is planned for a future release of Trial Submission Studio.

What is ADaM?

ADaM (Analysis Data Model) provides:

- Standards for analysis datasets

- Derived from SDTM data

- Ready for statistical analysis

- Required for FDA submissions

ADaM vs SDTM

| Aspect | SDTM | ADaM |

|---|---|---|

| Purpose | Data tabulation | Data analysis |

| Timing | Raw data collection | Derived for analysis |

| Structure | Observation-based | Analysis-ready |

| Audience | Data managers | Statisticians |

ADaM Dataset Types

ADSL - Subject-Level Analysis Dataset

One record per subject containing:

- Demographics

- Treatment information

- Key baseline characteristics

- Analysis flags

BDS - Basic Data Structure

Vertical structure for:

- Laboratory data (ADLB)

- Vital signs (ADVS)

- Efficacy parameters

OCCDS - Occurrence Data Structure

For event data:

- Adverse events (ADAE)

- Concomitant medications (ADCM)

Other Structures

- Time-to-Event (ADTTE)

- Medical History (ADMH)

Planned Features

When ADaM support is added, Trial Submission Studio will provide:

ADaM Generation

- Derive ADSL from DM and other SDTM domains

- Create BDS datasets from SDTM findings

- Generate OCCDS from events domains

ADaM Validation

- Check ADaM IG compliance

- Validate traceability to SDTM

- Verify required variables

ADaM Export

- Export to XPT format

- Generate Define-XML for ADaM

- Include in submission package

Current Workarounds

Until ADaM support is available:

-

Export SDTM first

- Use Trial Submission Studio for SDTM

- Generate XPT files

-

Derive ADaM externally

- Use SAS or R

- Apply ADaM derivation rules

- Generate analysis datasets

-

Validate separately

- Use external validation tools

- Check ADaM compliance

Timeline

ADaM support is on our roadmap. Priority features:

- ADSL generation

- BDS for VS and LB

- OCCDS for AE

Resources

CDISC ADaM Resources

Stay Updated

- Check the Roadmap for updates

- Watch the GitHub repository for releases

SEND (Preview)

The Standard for Exchange of Nonclinical Data (SEND) extends SDTM for animal studies.

Note

SEND support is planned for a future release of Trial Submission Studio.

What is SEND?

SEND (Standard for Exchange of Nonclinical Data) provides:

- Standardized format for nonclinical (animal) study data

- Based on SDTM structure

- Required for FDA nonclinical submissions

- Supports toxicology and pharmacology studies

SEND vs SDTM

| Aspect | SDTM | SEND |

|---|---|---|

| Subjects | Human | Animal |

| Studies | Clinical trials | Nonclinical studies |

| Domains | Clinical domains | Nonclinical domains |

| Requirements | NDA, BLA | IND, NDA (nonclinical) |

SEND Domains

Special Purpose

| Domain | Name |

|---|---|

| DM | Demographics |

| DS | Disposition |

| TA | Trial Arms |

| TE | Trial Elements |

| TS | Trial Summary |

| TX | Trial Sets |

Findings

| Domain | Name |

|---|---|

| BW | Body Weight |

| BG | Body Weight Gain |

| CL | Clinical Observations |

| DD | Death Diagnosis |

| FW | Food/Water Consumption |

| LB | Laboratory Results |

| MA | Macroscopic Findings |

| MI | Microscopic Findings |

| OM | Organ Measurements |

| PC | Pharmacokinetic Concentrations |

| PP | Pharmacokinetic Parameters |

| TF | Tumor Findings |

| VS | Vital Signs |

Interventions

| Domain | Name |

|---|---|

| EX | Exposure |

Key Differences from SDTM

Subject Identification

- USUBJID format differs for animals

- Species and strain information required

- Group/cage identification

Domain-Specific Variables

SEND includes nonclinical-specific variables:

- Species, strain, sex

- Dose group information

- Study day calculations

- Sacrifice/necropsy data

Controlled Terminology

SEND uses specific CT:

- Animal species

- Strain/substrain

- Route of administration (nonclinical)

- Specimen types

Planned Features

When SEND support is added, Trial Submission Studio will provide:

SEND Import/Mapping

- Support nonclinical data formats

- Map to SEND domains

- Handle group-level data

SEND Validation

- SEND-IG compliance checking

- Nonclinical-specific rules

- Controlled terminology for SEND

SEND Export

- XPT V5 format

- Define-XML for SEND

- Submission-ready packages

Current Workarounds

Until SEND support is available:

-

Manual Mapping

- Use current SDTM workflow

- Manually adjust for SEND differences

- Export to XPT

-

External Tools

- Use specialized nonclinical tools

- Validate with SEND validators

SEND Versions

| Version | Description |

|---|---|

| SEND 3.1.1 | Current FDA standard |

| SEND 3.1 | Previous version |

| SEND 3.0 | Initial release |

Resources

CDISC SEND Resources

FDA Resources

Stay Updated

XPT (SAS Transport) Format

XPT is the FDA-standard format for regulatory data submissions.

Overview

The SAS Transport Format (XPT) is:

- Required by FDA for electronic submissions

- A platform-independent binary format

- Compatible with SAS and other tools

- The de facto standard for clinical data exchange

XPT Versions

Trial Submission Studio supports two XPT versions:

XPT Version 5 (FDA Standard)

| Characteristic | Limit |

|---|---|

| Variable name length | 8 characters |

| Variable label length | 40 characters |

| Record length | 8,192 bytes |

| Numeric precision | 8 bytes (IEEE) |

Use for: FDA submissions, regulatory requirements

XPT Version 8 (Extended)

| Characteristic | Limit |

|---|---|

| Variable name length | 32 characters |

| Variable label length | 256 characters |

| Record length | 131,072 bytes |

| Numeric precision | 8 bytes (IEEE) |

Use for: Internal use, longer names needed

File Structure

Header Records

XPT files contain metadata headers:

- Library header (first record)

- Member header (dataset info)

- Namestr records (variable definitions)

Data Records

- Fixed-width records

- Packed binary format

- IEEE floating-point numbers

Creating XPT Files

Export Steps

- Complete data mapping

- Run validation

- Click Export → XPT

- Select version (V5 or V8)

- Choose output location

- Click Save

Export Options

| Option | Description |

|---|---|

| Version | V5 (default) or V8 |

| Sort by keys | Order records by key variables |

| Include metadata | Dataset label, variable labels |

XPT Constraints

Variable Names

V5 Requirements:

- Maximum 8 characters

- Start with letter or underscore

- Alphanumeric and underscore only

- Uppercase recommended

V8 Requirements:

- Maximum 32 characters

- Same character restrictions

Variable Labels

V5: 40 characters max V8: 256 characters max

Data Values

Character variables:

- V5: Max 200 bytes per value

- Trailing spaces trimmed

- Missing = blank

Numeric variables:

- 8-byte IEEE format

- 28 SAS missing value codes supported (.A through .Z, ._)

- Precision: ~15 significant digits

Numeric Precision

IEEE to SAS Conversion

Trial Submission Studio handles:

- IEEE 754 double precision

- SAS missing value encoding

- Proper byte ordering

Missing Values

SAS/XPT supports 28 missing value codes:

| Code | Meaning |

|---|---|

. | Standard missing |

.A - .Z | Special missing A-Z |

._ | Underscore missing |

Validation Before Export

Automatic Checks

- Variable name lengths

- Label lengths

- Data type compatibility

- Value length limits

Common Issues

| Issue | Solution |

|---|---|

| Name too long | Use V8 or rename |

| Label truncated | Shorten label |

| Value too long | Truncate or split |

Post-Export Verification

Recommended Steps

- Check file size - Matches expected data volume

- Open in viewer - Verify structure

- Validate with external tools - Pinnacle 21, SAS

- Compare row counts - Match source data

External Validation

Consider validating with:

- Pinnacle 21 Community (free)

- SAS Universal Viewer

- Other XPT readers

FDA Submission Requirements

Required Format

- XPT Version 5 for FDA submissions

- Define-XML 2.1 for metadata

- Appropriate file naming (lowercase domain codes)

File Naming Convention

dm.xpt- Demographicsae.xpt- Adverse Eventsvs.xpt- Vital Signs- (lowercase domain abbreviation)

Dataset Limits

| Constraint | Limit |

|---|---|

| File size | 5 GB (practical limit) |

| Variables per dataset | No formal limit |

| Records per dataset | No formal limit |

Technical Details

Byte Order

- XPT uses big-endian byte order

- Trial Submission Studio handles conversion automatically

Character Encoding

- ASCII-compatible

- Extended ASCII for special characters

- UTF-8 source data converted appropriately

Record Blocking

- 80-byte logical records

- Blocked for efficiency

- Headers use fixed-format records

Next Steps

- Dataset-XML - Alternative export format

- Define-XML - Metadata documentation

- Exporting Data - Export guide

Dataset-XML Format

Dataset-XML is a CDISC standard XML format for clinical data exchange.

Overview

Dataset-XML provides:

- Human-readable data format

- Full Unicode support

- Embedded metadata

- Alternative to XPT binary format

When to Use Dataset-XML

| Use Case | Recommendation |

|---|---|

| FDA submission | Use XPT (required) |

| Internal data exchange | Dataset-XML works well |

| Archive/audit trail | Good for documentation |

| Non-SAS environments | Easier integration |

| Full character support | Unicode capable |

Format Structure

ODM Container

Dataset-XML is based on CDISC ODM (Operational Data Model):

<?xml version="1.0" encoding="UTF-8"?>

<ODM xmlns="http://www.cdisc.org/ns/odm/v1.3"

xmlns:data="http://www.cdisc.org/ns/Dataset-XML/v1.0"

FileType="Snapshot">

<ClinicalData StudyOID="..." MetaDataVersionOID="...">

<SubjectData SubjectKey="...">

<StudyEventData StudyEventOID="...">

<ItemGroupData ItemGroupOID="DM">

<ItemData ItemOID="STUDYID">ABC123</ItemData>

<ItemData ItemOID="USUBJID">ABC123-001</ItemData>

<!-- More items -->

</ItemGroupData>

</StudyEventData>

</SubjectData>

</ClinicalData>

</ODM>

Key Elements

| Element | Description |

|---|---|

ODM | Root container |

ClinicalData | Study data container |

SubjectData | Per-subject data |

ItemGroupData | Domain records |

ItemData | Individual values |

Creating Dataset-XML

Export Steps

- Complete data mapping

- Run validation

- Click Export → Dataset-XML

- Configure options

- Choose output location

- Click Save

Export Options

| Option | Description |

|---|---|

| Include metadata | Embed variable definitions |